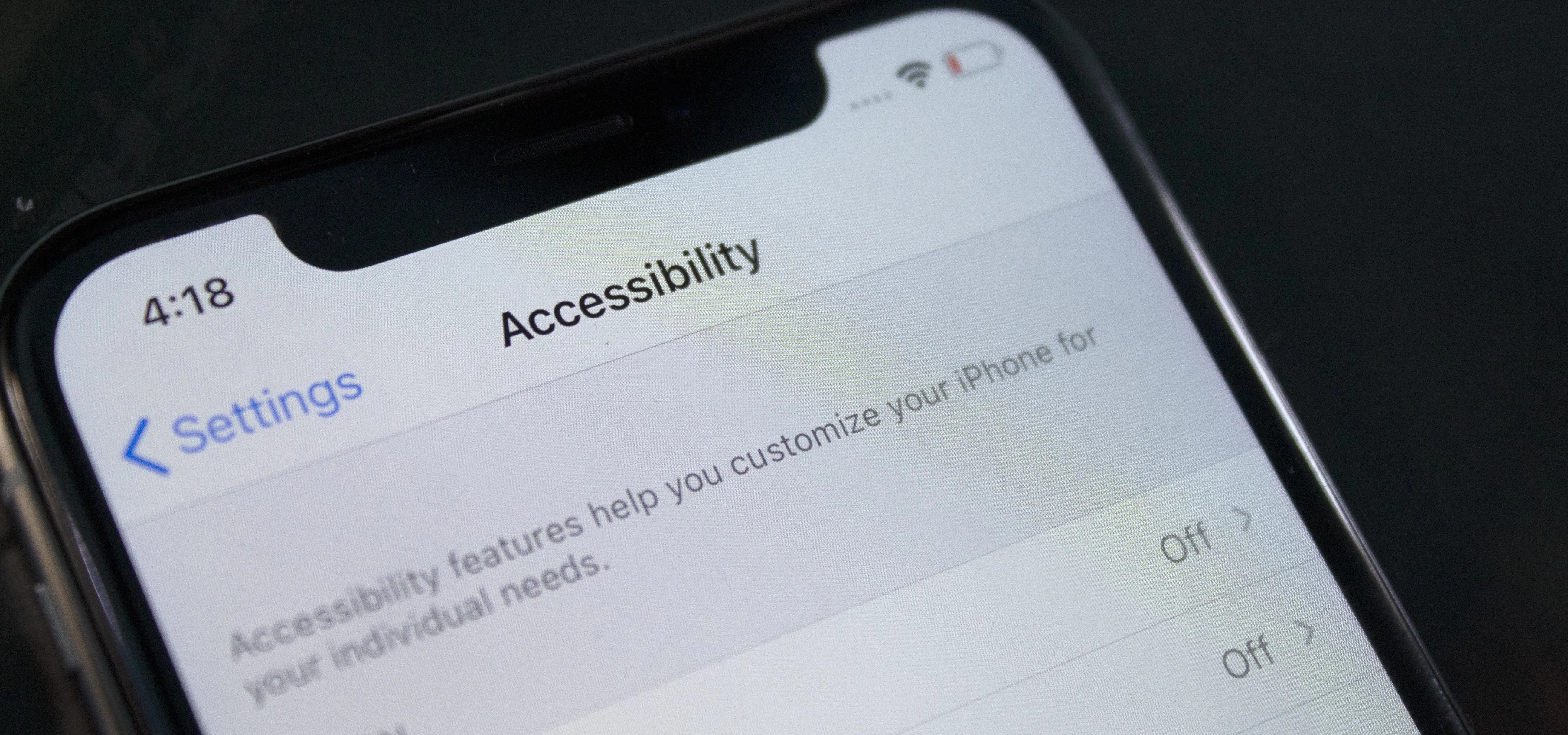

According to the CDC, one in four U.S. adults has a disability, with limitations that can involve vision, cognitive function, hearing, motor skills, and more. That's why the iPhone has accessibility features: so that everyone can use an iPhone, not just those without any impairments. Apple's iOS 14 makes the iPhone even more accessible, and the new tools benefit everyone, not just those who need them.

Without certain accessibility options, a sizable portion of the population couldn't use an iPhone to explore apps, play games, learn something new, or connect with friends. Studies show that 71 percent of users with a disability will immediately leave a website that doesn't accommodate them, and that kind of gap is just one thing iOS 14 addresses. But there's something for everyone in this update.


1. VoiceOver Is Smarter Overall

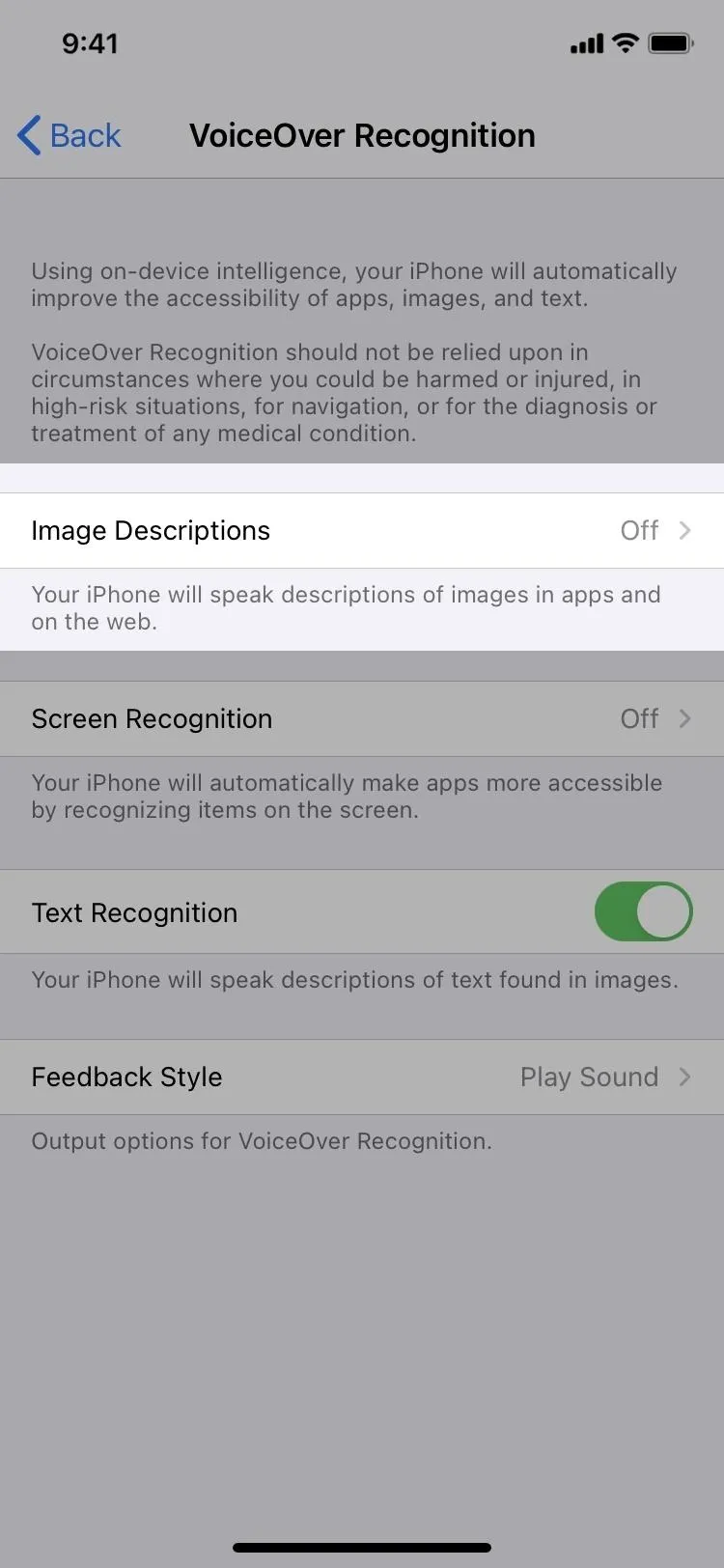

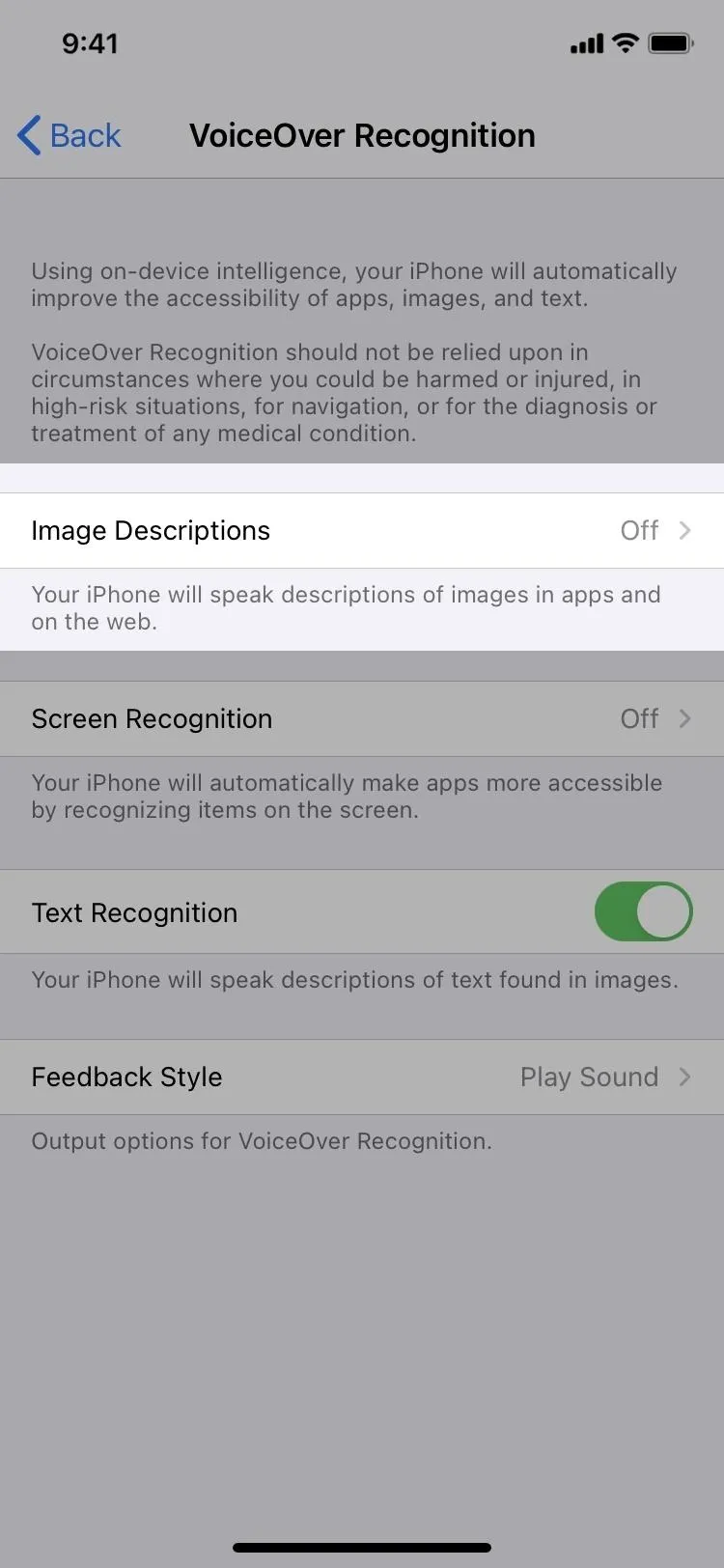

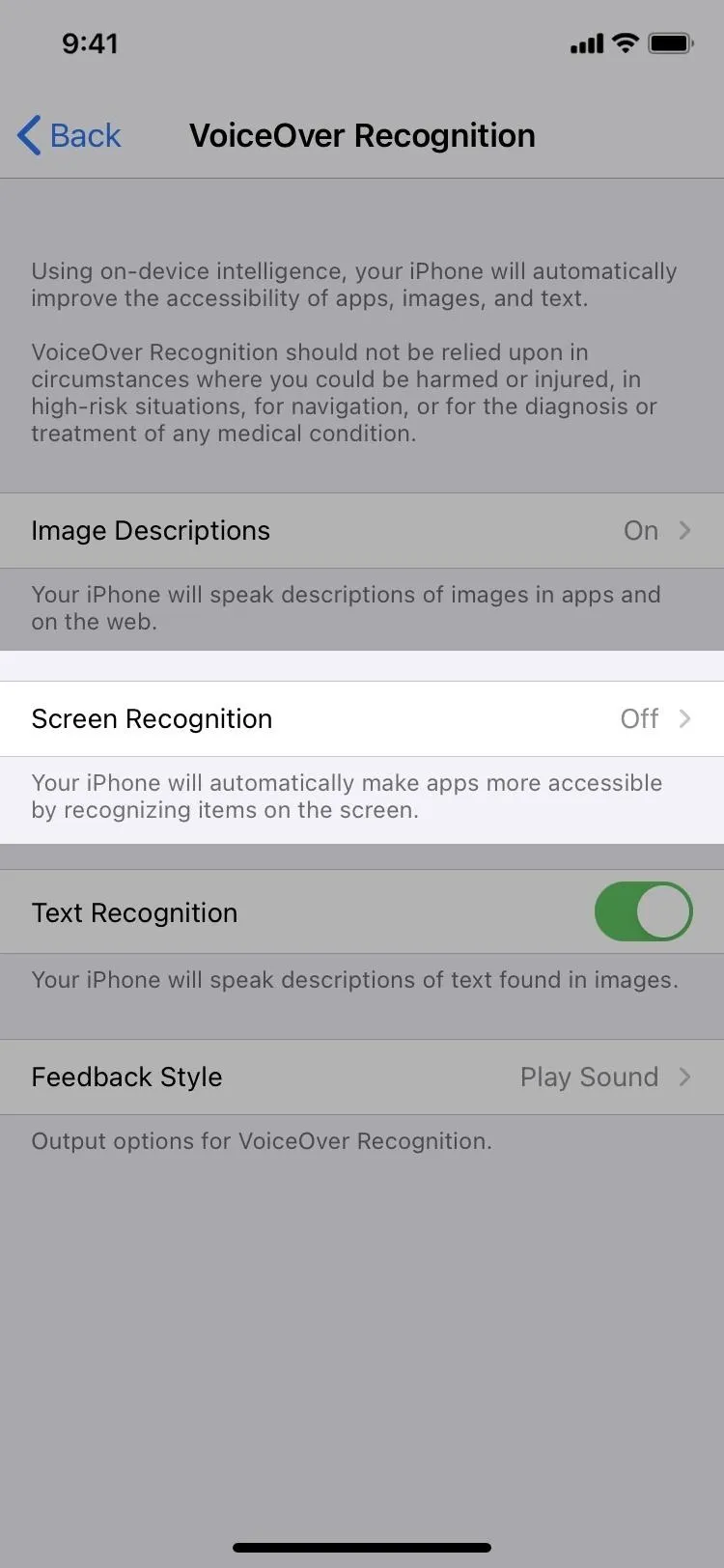

VoiceOver is the screen reader for people who are blind or have low vision; it reads aloud what appears on the screen. In iOS 13 and below, it was fairly basic and relied on the labels that app and website developers provide. But not every app includes this information, and that's where iOS 14 saves the day.

Now, when developers don't provide that data, on-device intelligence determines what on-screen items are and how to describe them. So people who can't see will be able to use far more of their iPhone, not just the parts that were labeled.

2. And It Can Recognize Descriptions for Images & Photos

VoiceOver can now read the descriptions that accompany images and photos in apps. That wasn't possible before, so now you'll know what's going on in any media you touch, as long as it provides that information.

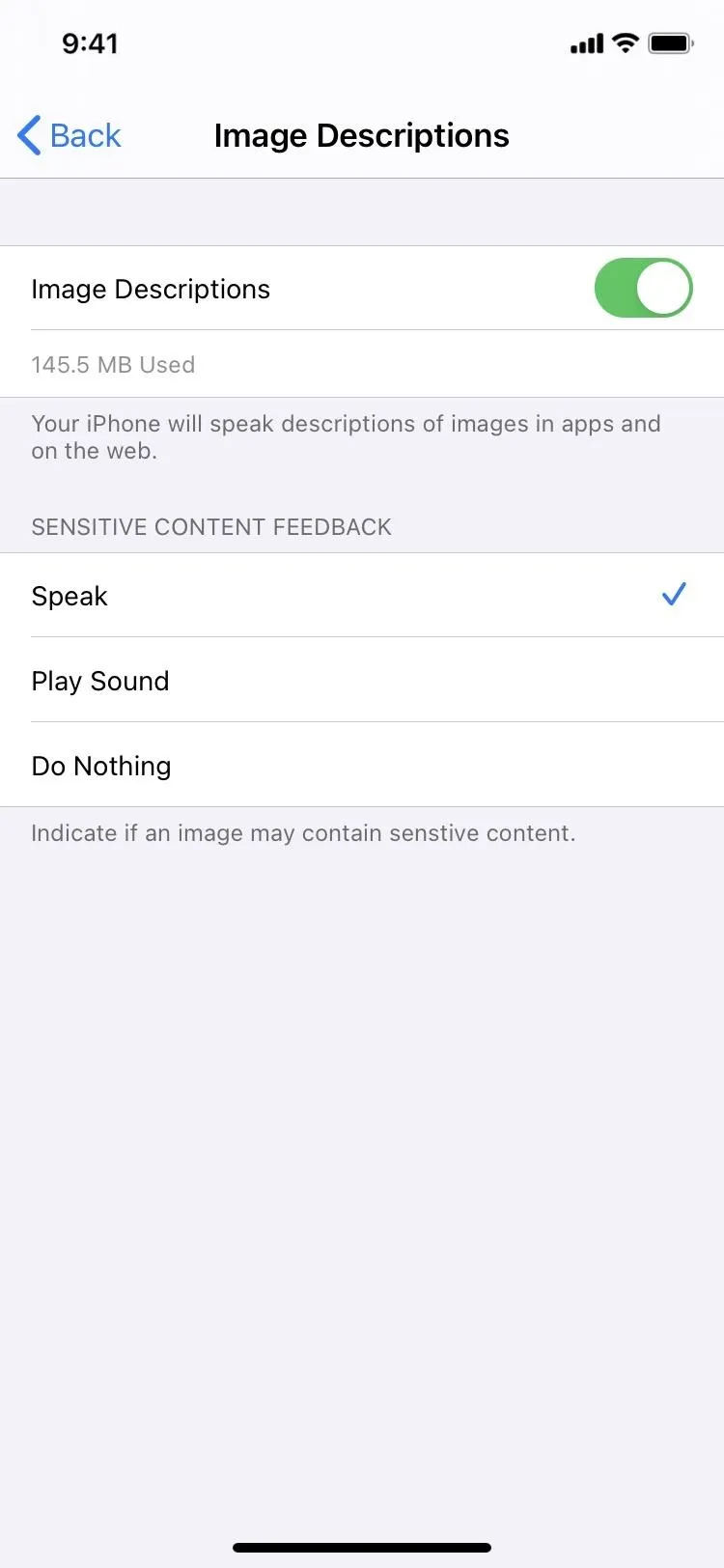

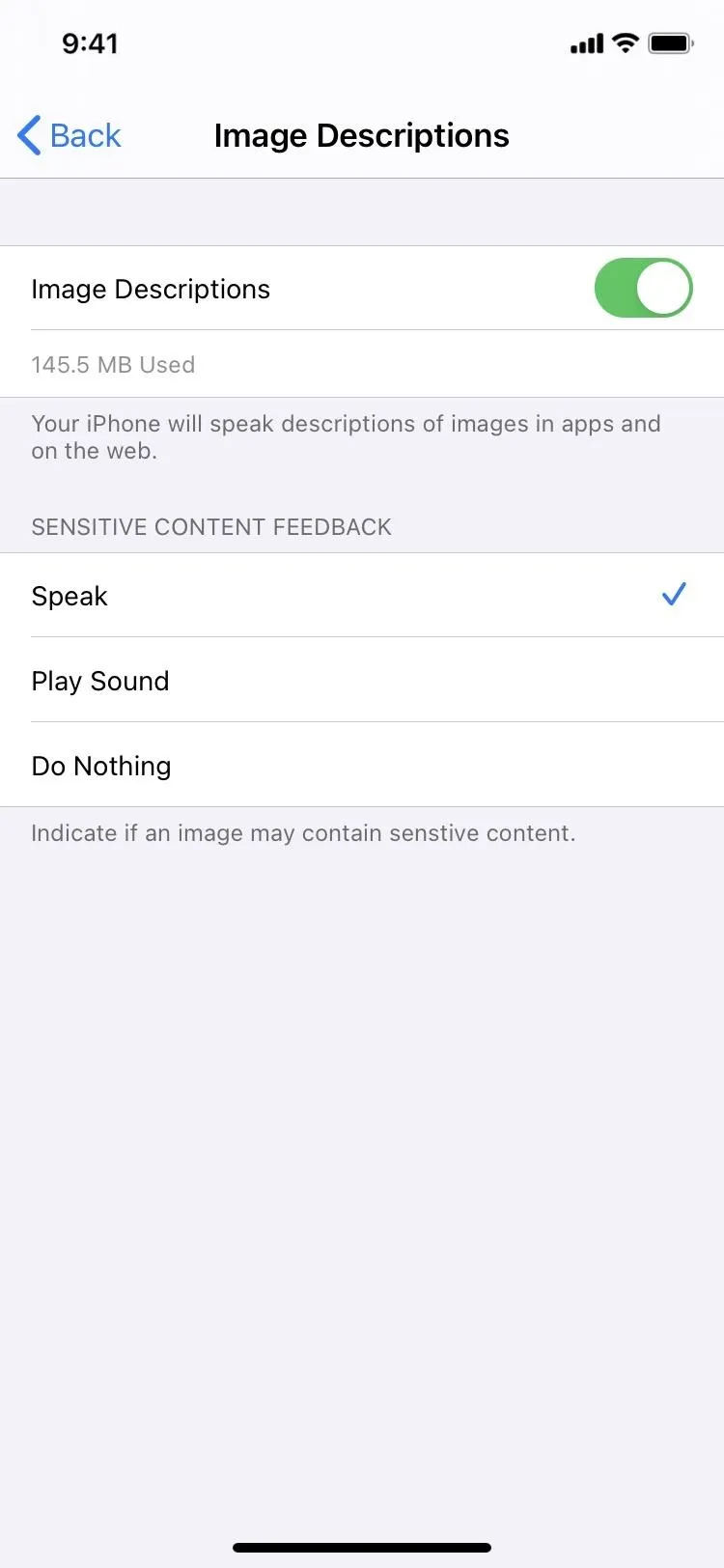

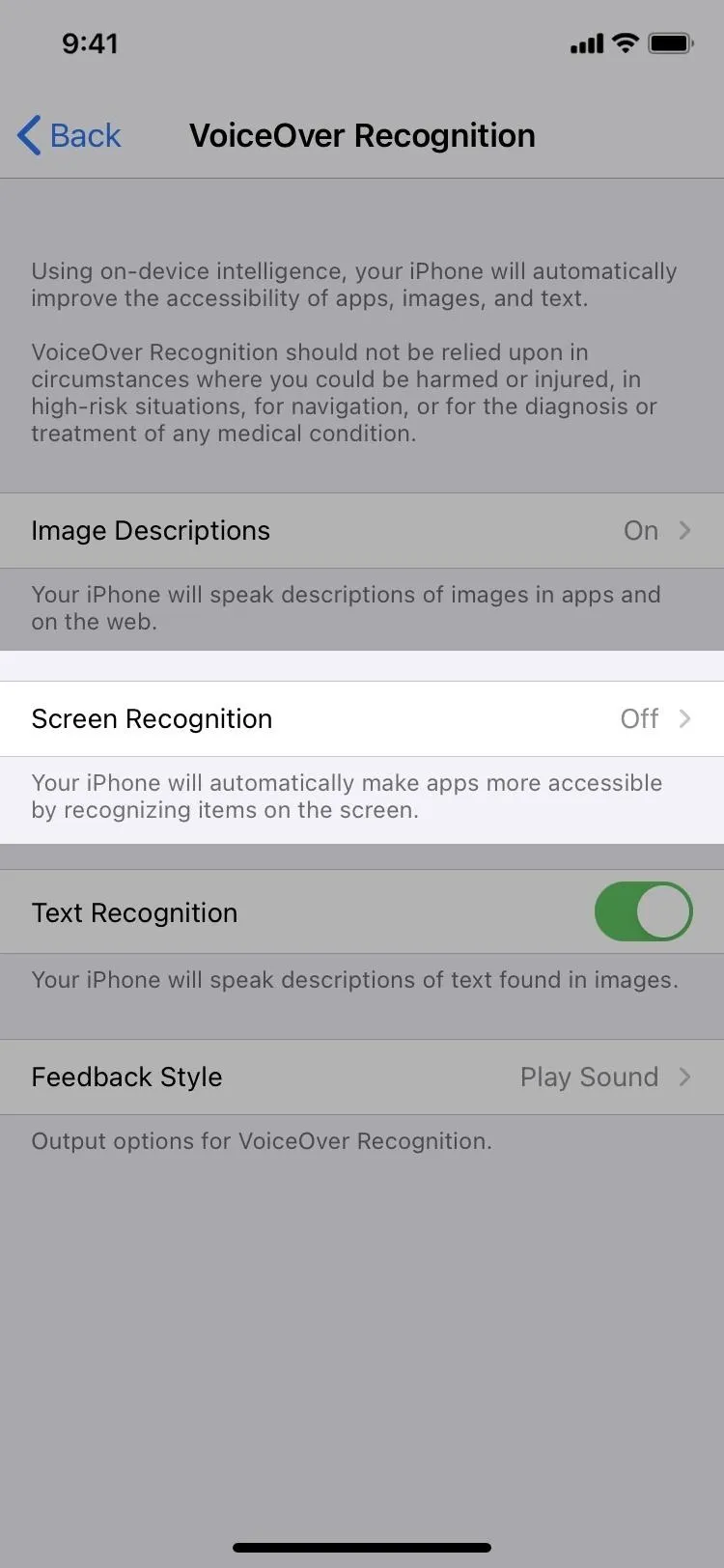

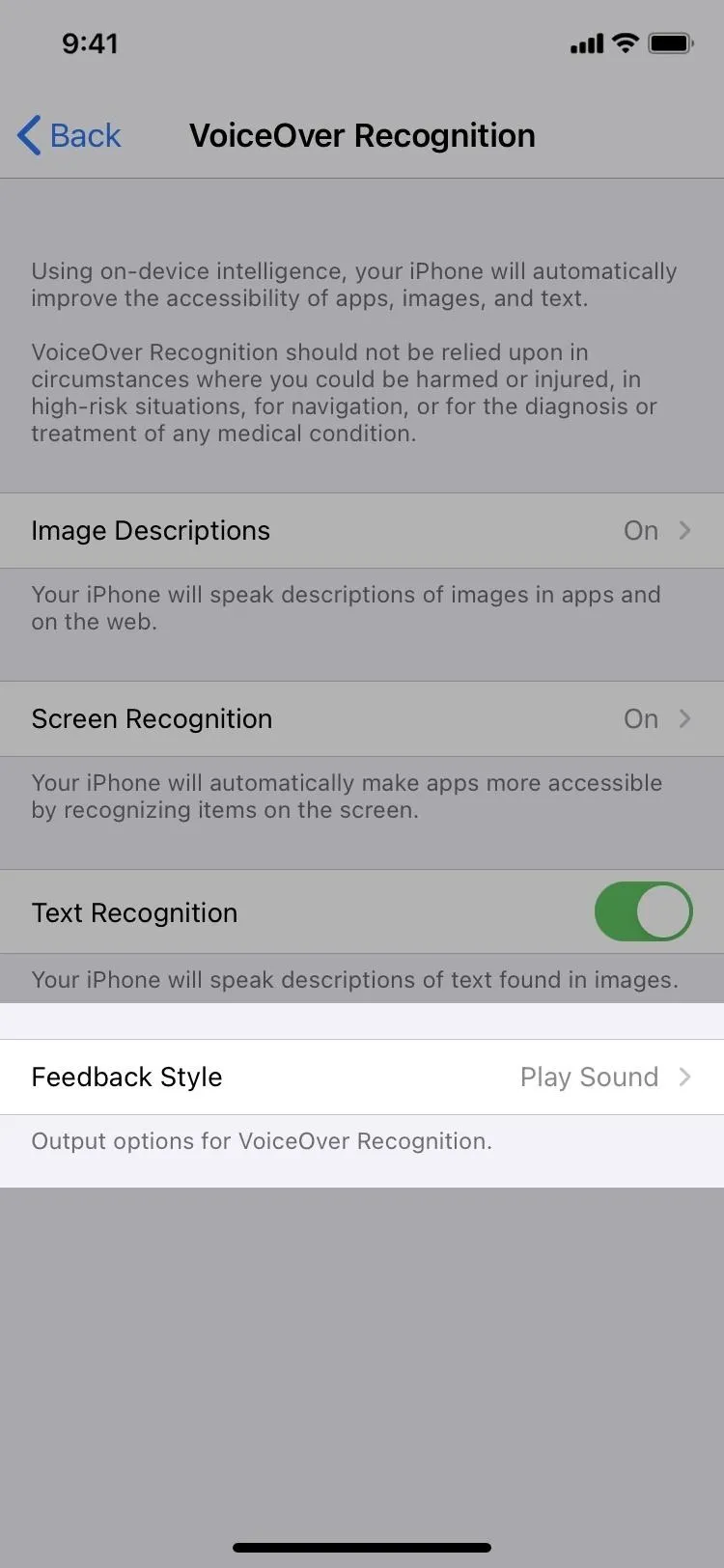

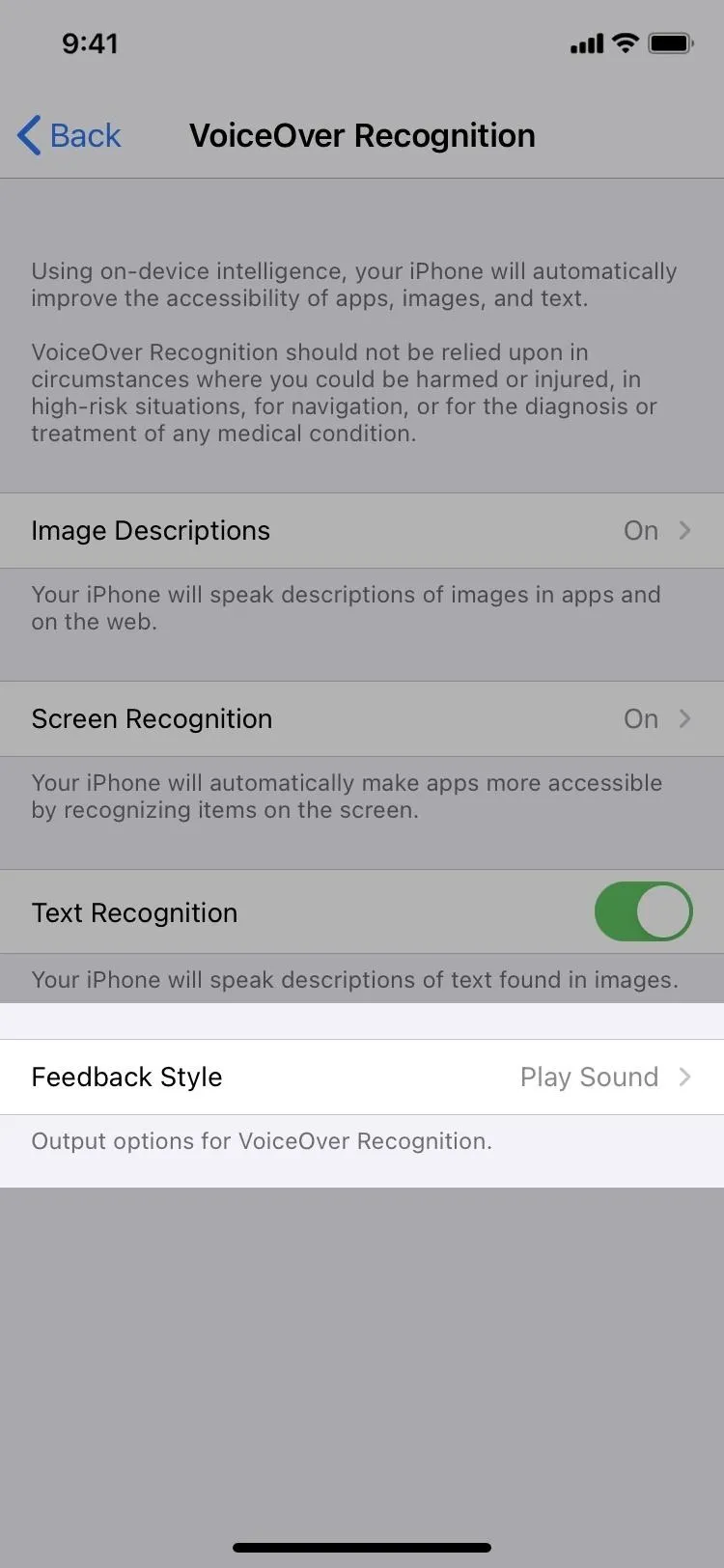

It's opt-in and can be turned on via "Image Descriptions" in the VoiceOver Recognition settings. If a description indicates something overly graphic, VoiceOver will warn you by default, but you can have it play a sound or do nothing instead.

3. And Text Within Images & Photos

Another significant new VoiceOver ability is reading text found within images and photos. So if words are part of the picture itself rather than a separate label, VoiceOver will still recognize them. When VoiceOver Recognition is turned on, "Text Recognition" is enabled by default.

4. And Interface Controls in Apps

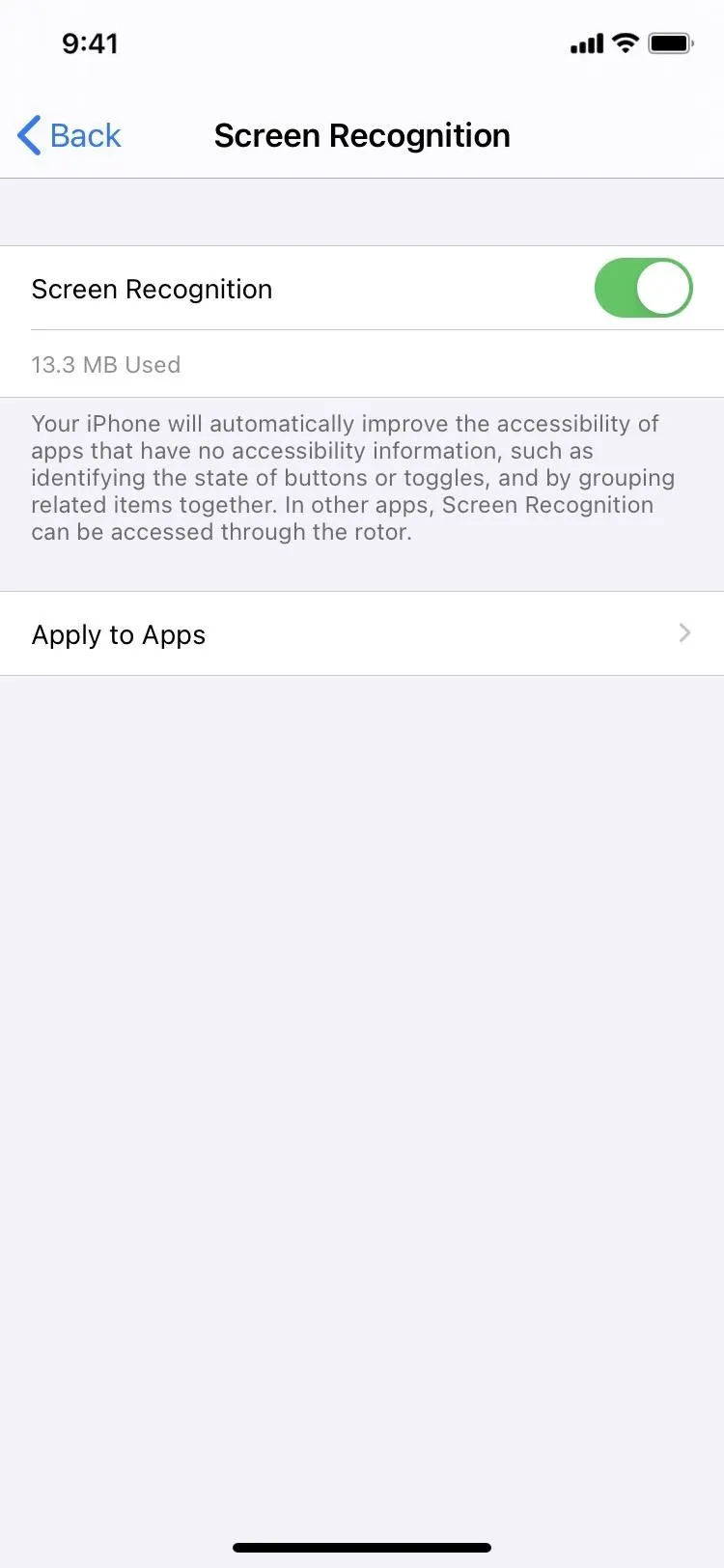

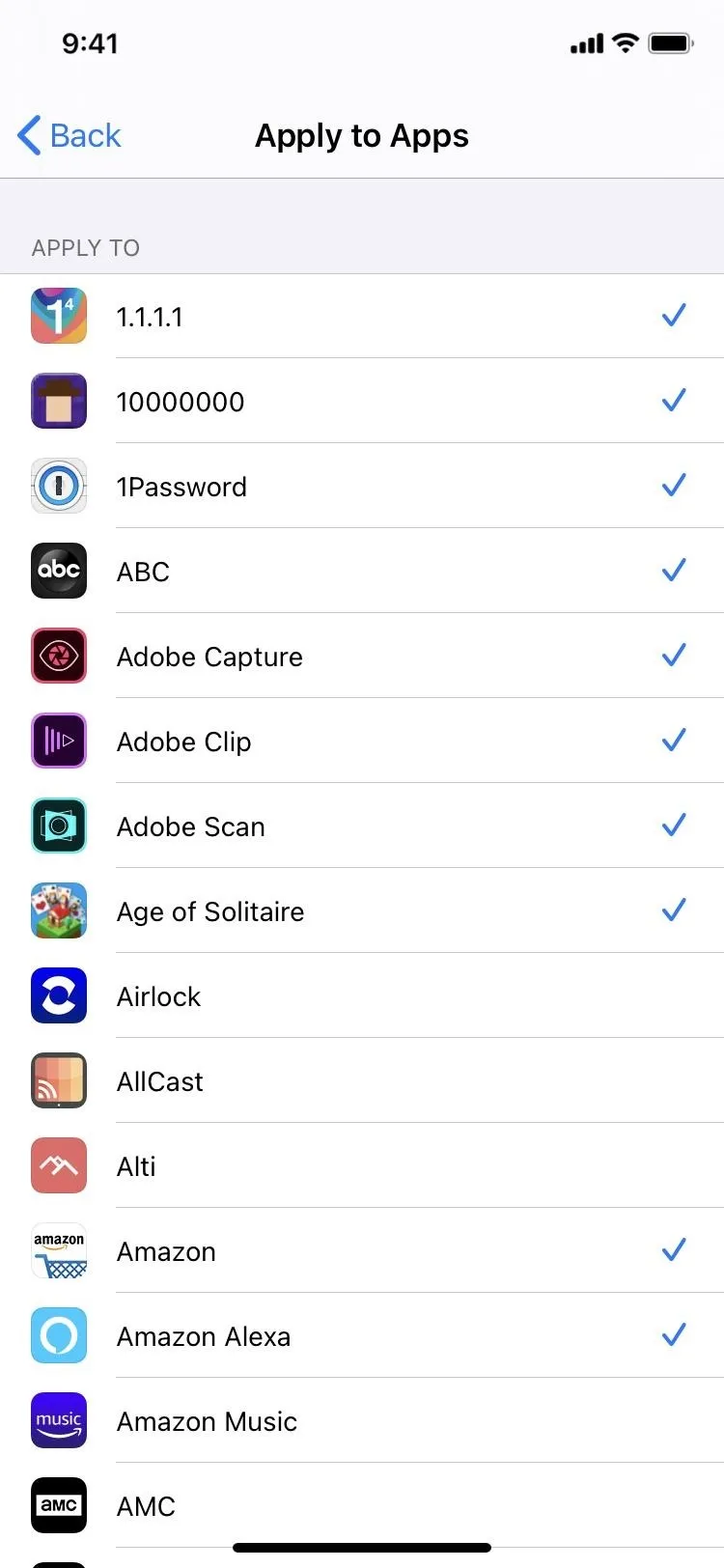

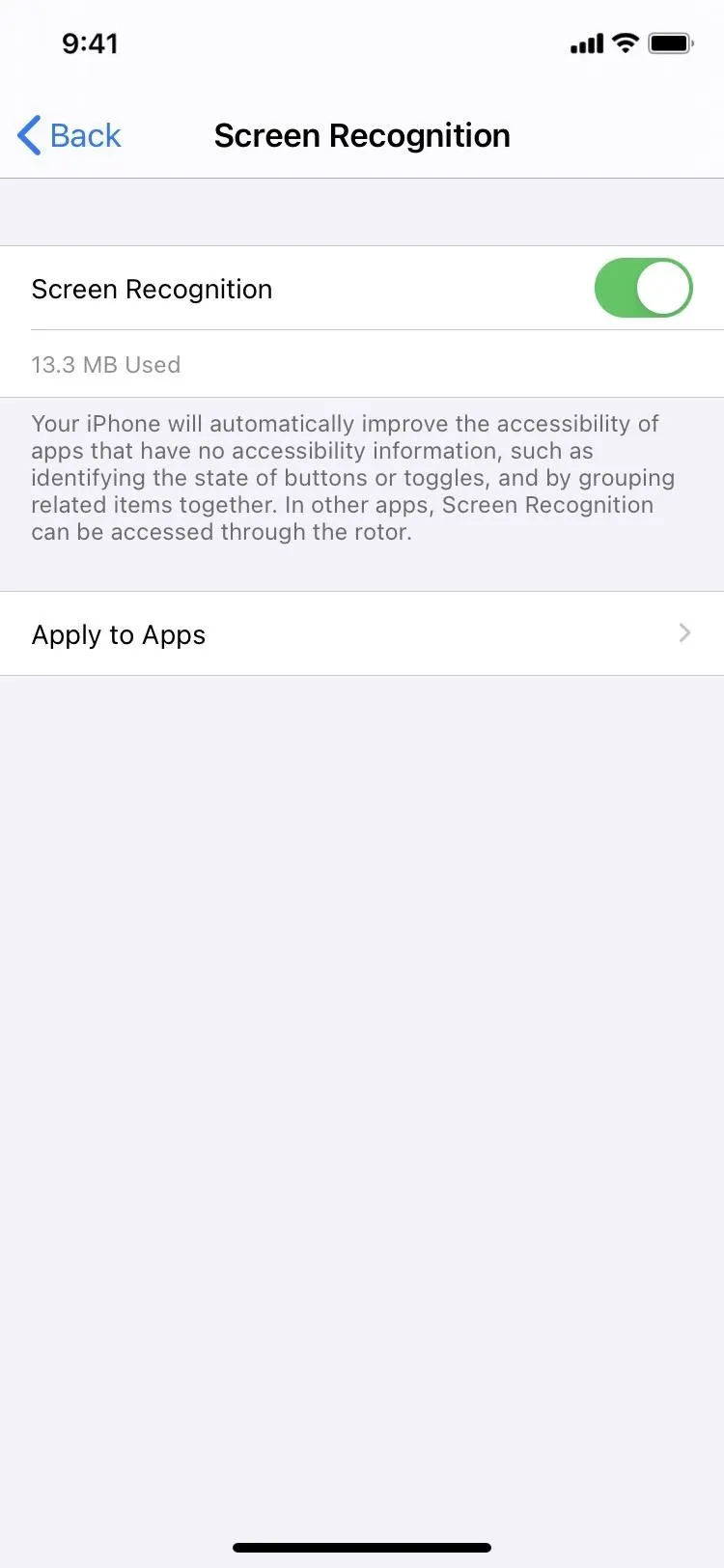

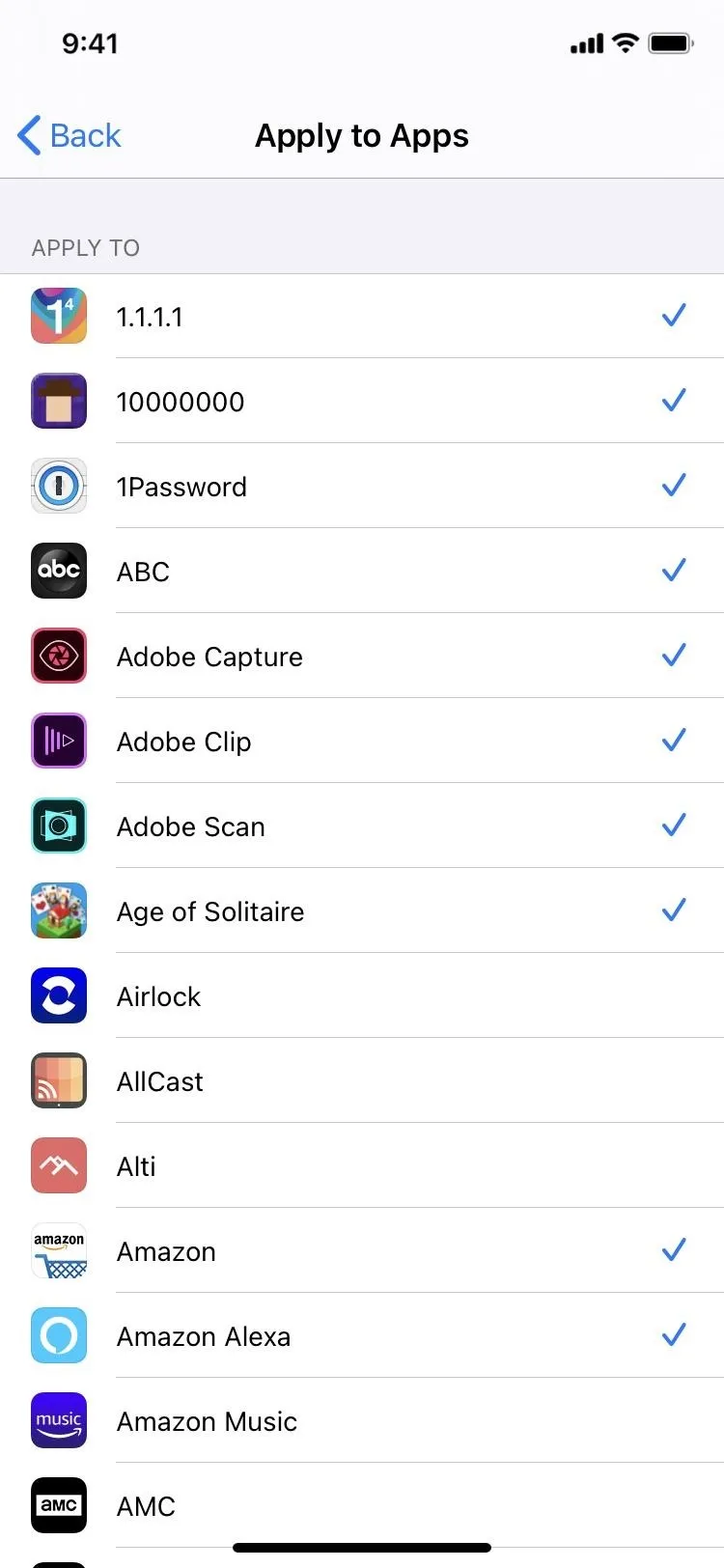

VoiceOver can intelligently describe on-screen interface controls when it detects them, which makes apps easier to navigate and therefore more accessible. It's off by default, but you can enable it under "Screen Recognition" in the VoiceOver Recognition settings. Then, you can choose which apps you want it to work with.

In apps that have no accessibility information, your iPhone will automatically improve accessibility by identifying the state of buttons or toggles and by grouping related items together. In other apps, Screen Recognition can be accessed through the rotor.

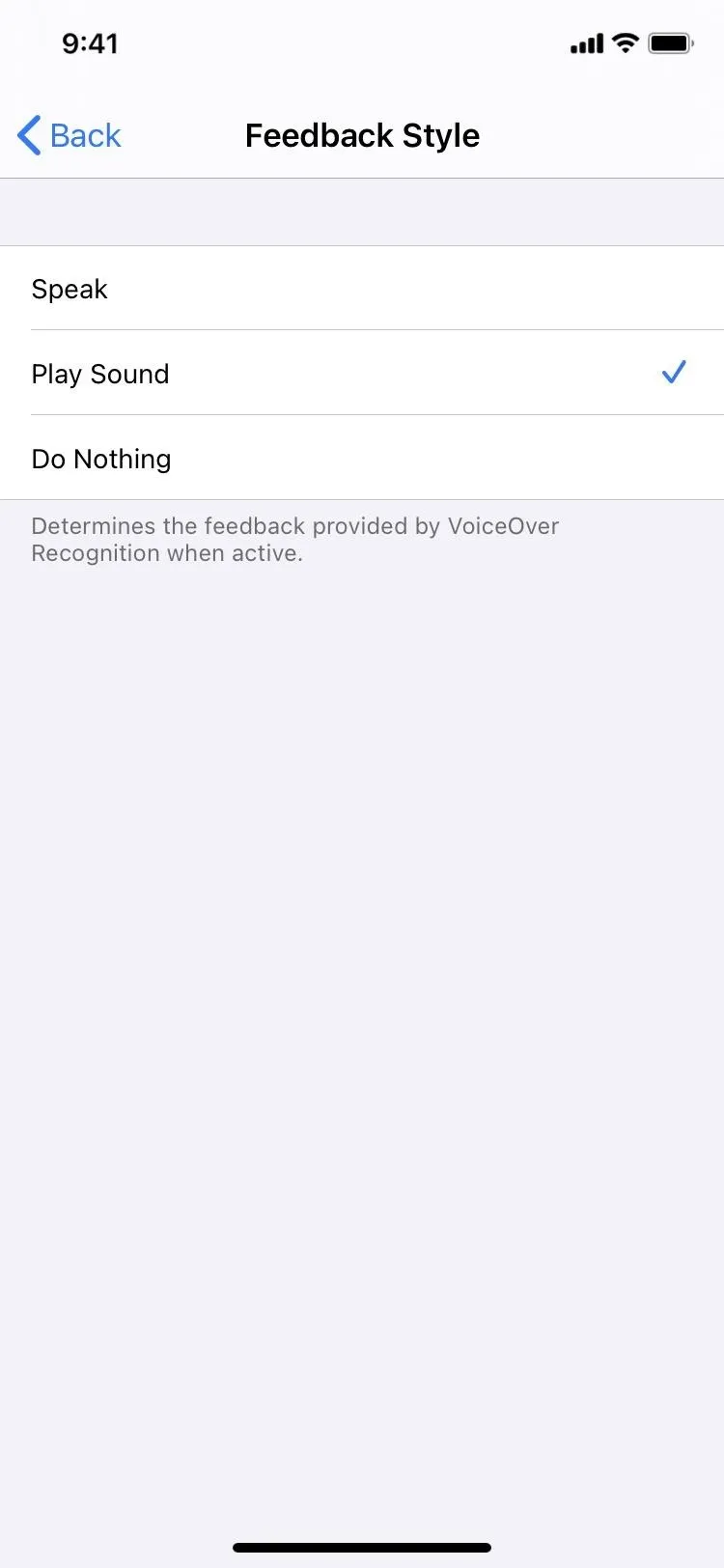

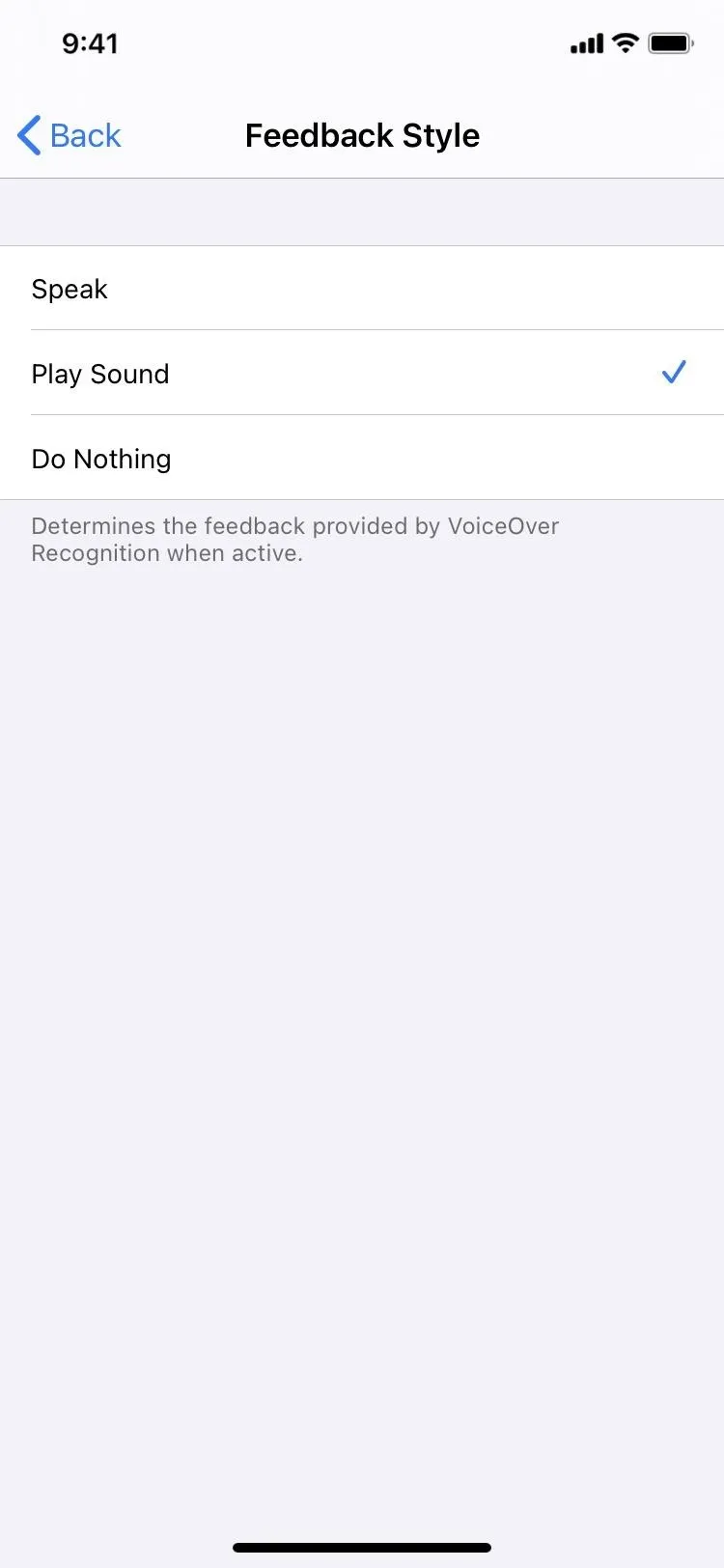

5. VoiceOver Tells You When VoiceOver Recognition Is Used

The last new feature for VoiceOver is "Feedback Style," found in the VoiceOver Recognition settings. By default, it plays a sound whenever Recognition is active, but you can have it speak instead or do nothing.

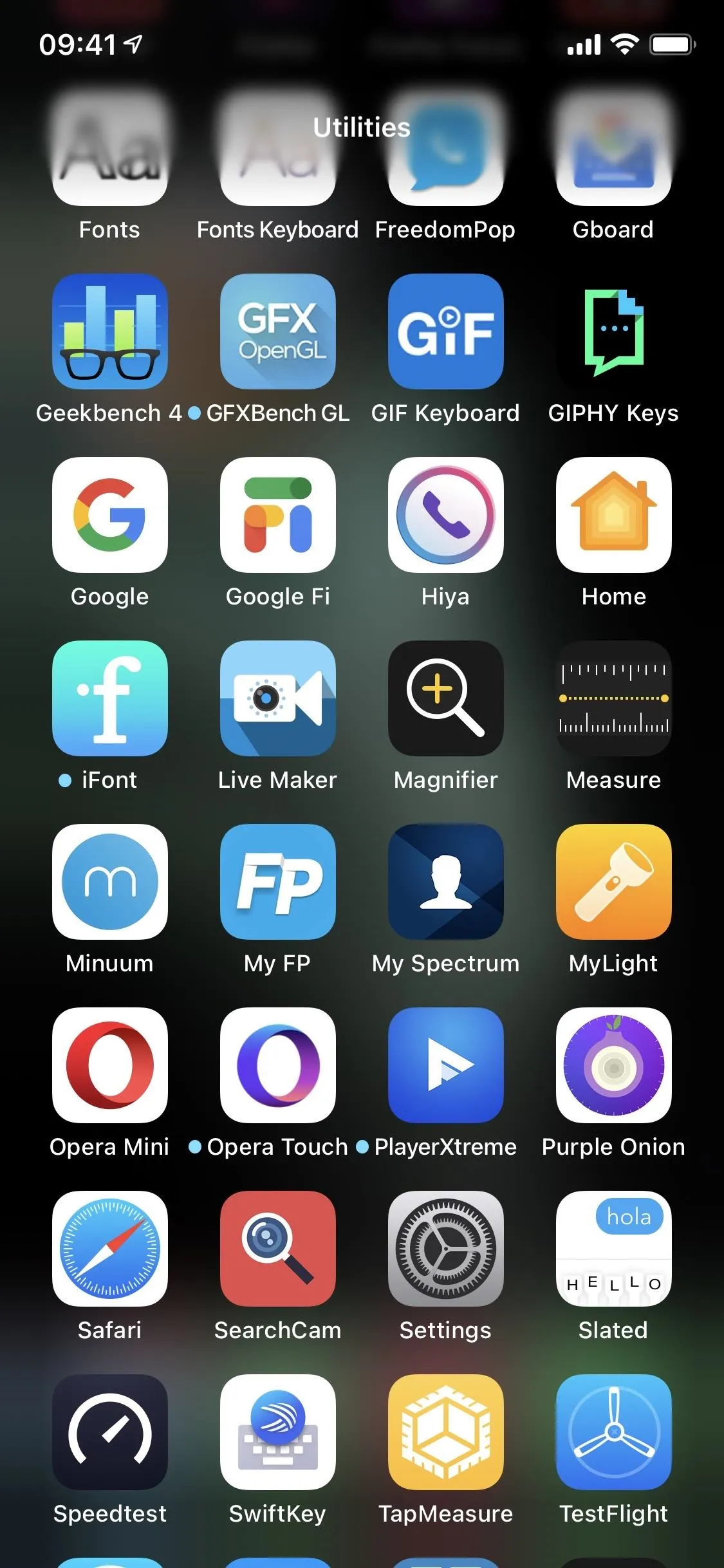

6. Magnifier Has an App Icon Now

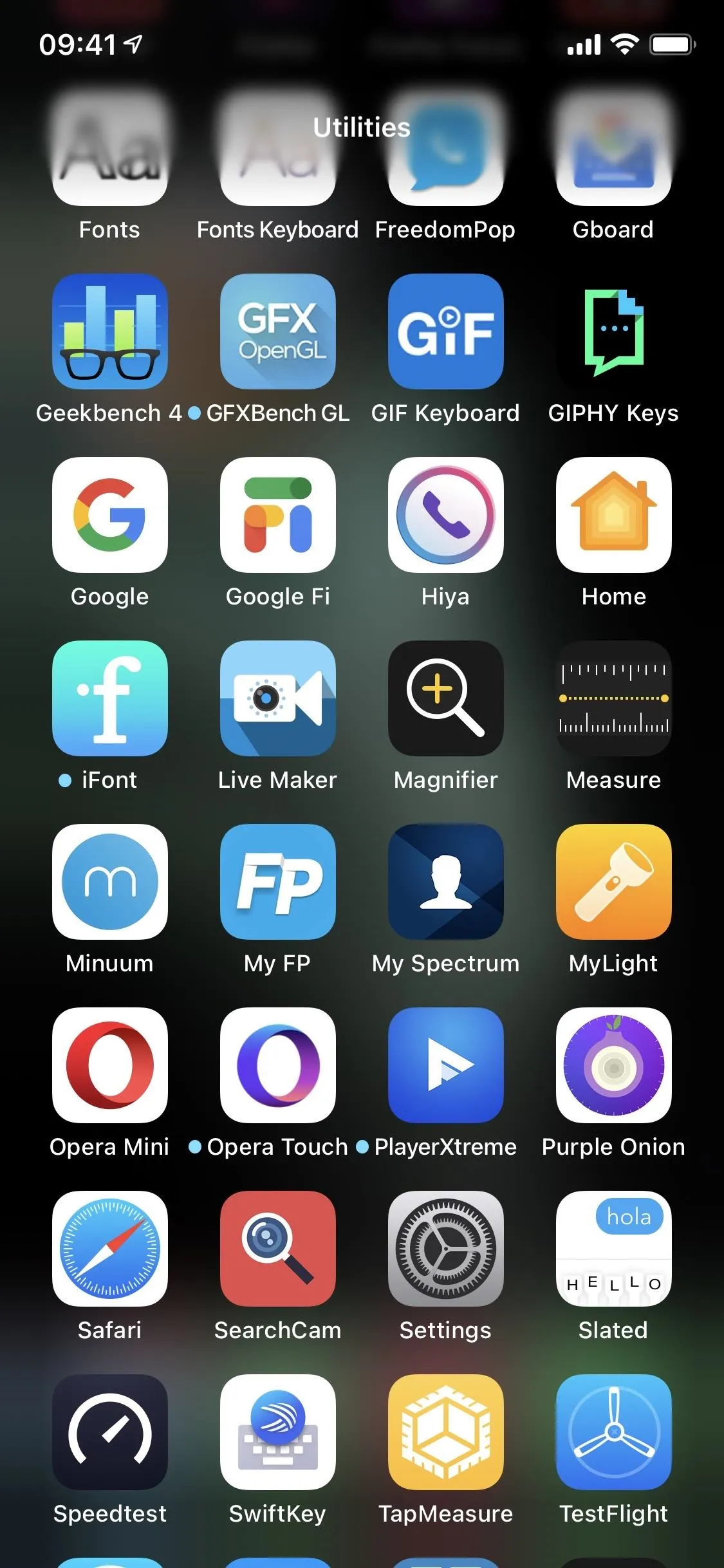

The Magnifier tool, which turns your camera into a magnifying glass, has been around since iOS 10, but it's gone unnoticed by most because it's relatively well hidden. That changes in iOS 14 since there's now an option to add it to your home screen, App Library, and Search.

To find it, you must have Magnifier turned on via Settings –> Accessibility –> Magnifier. Then, you can locate it in the "Utilities" or "Recently Added" folders in the App Library. From there, you can drag it onto the home screen to create a shortcut, so you no longer have to triple-click anything to open it. You can also find it with the Search tool. But if you ever disable Magnifier in Settings, the app icon will disappear everywhere.

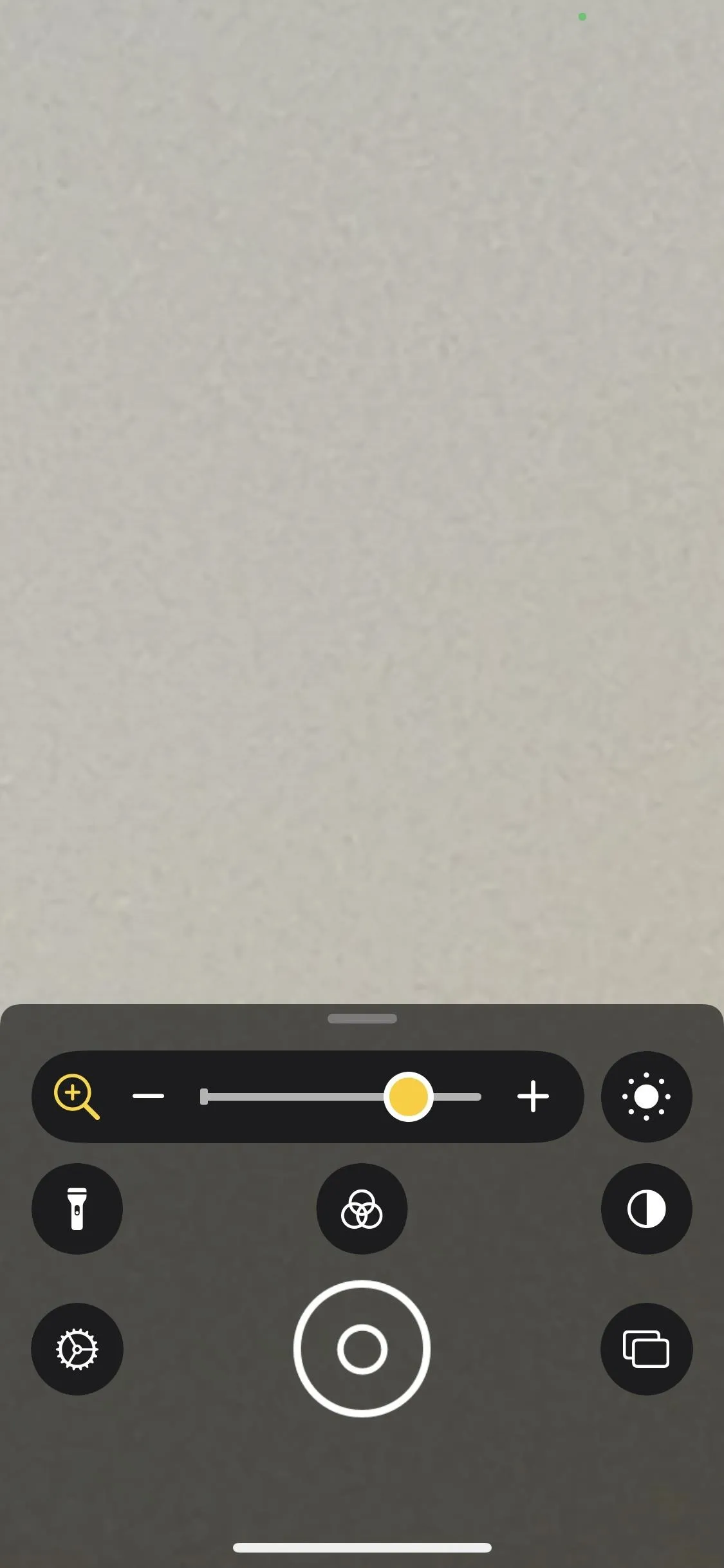

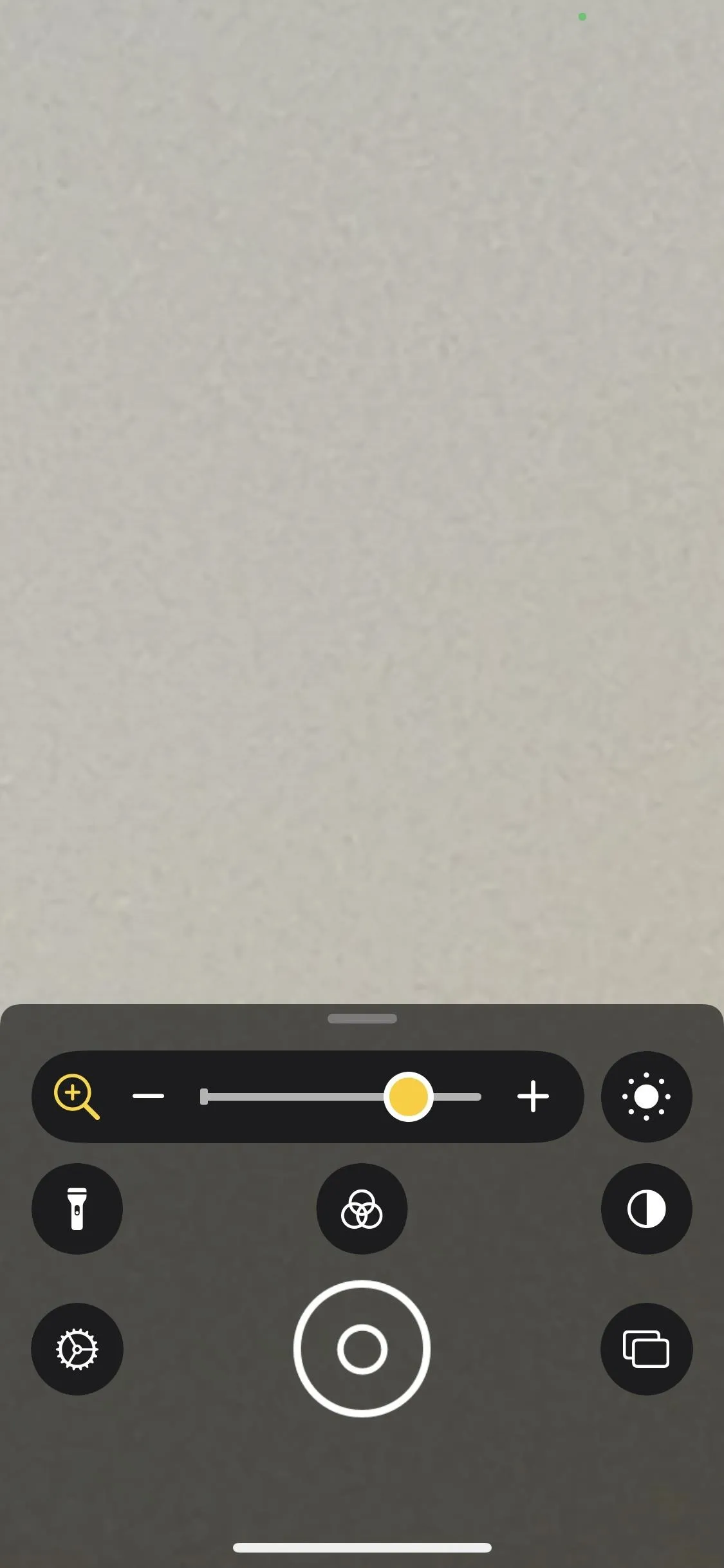

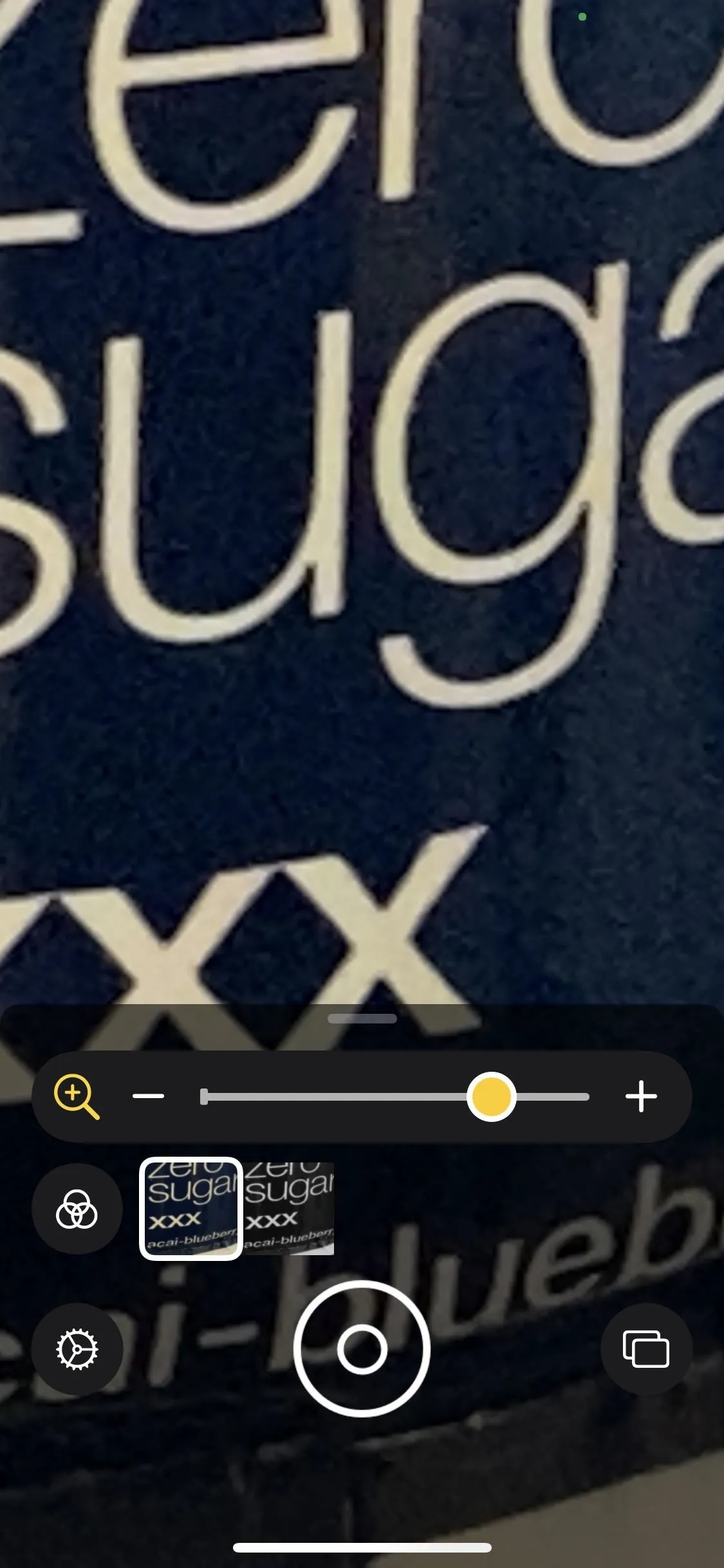

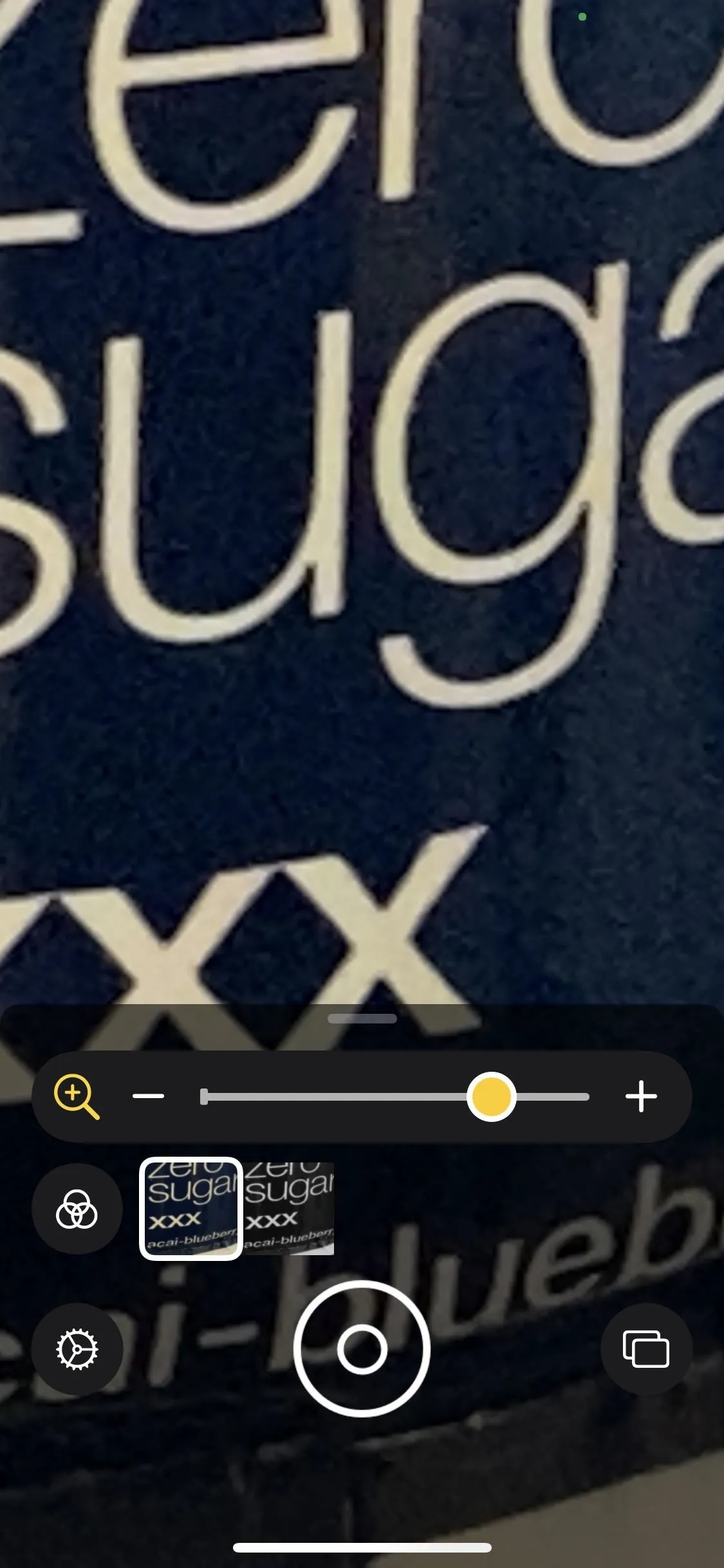

7. And Its Controls Can Be Hidden

Before, whenever Magnifier was opened, the onscreen controls would stay visible at all times unless you took a picture. Now, in iOS 14, you can swipe the control panel down to see and use just the zoom slider, and you can double-tap on the screen to hide the whole thing (and double-tap again to show it).

8. Magnifier Has a New Interface

The controls before were pretty basic. The slider would control zooming, the lightning bolt would turn on the flash, the lock icon would lock the exposure, the filter button would give access to colored filters and contrast/brightness levels, and the shutter would take a still image.

Now, the zoom slider looks a little better, the contrast and brightness levels are front and center instead of hidden in filters, and the lightning bolt is now an actual flashlight.

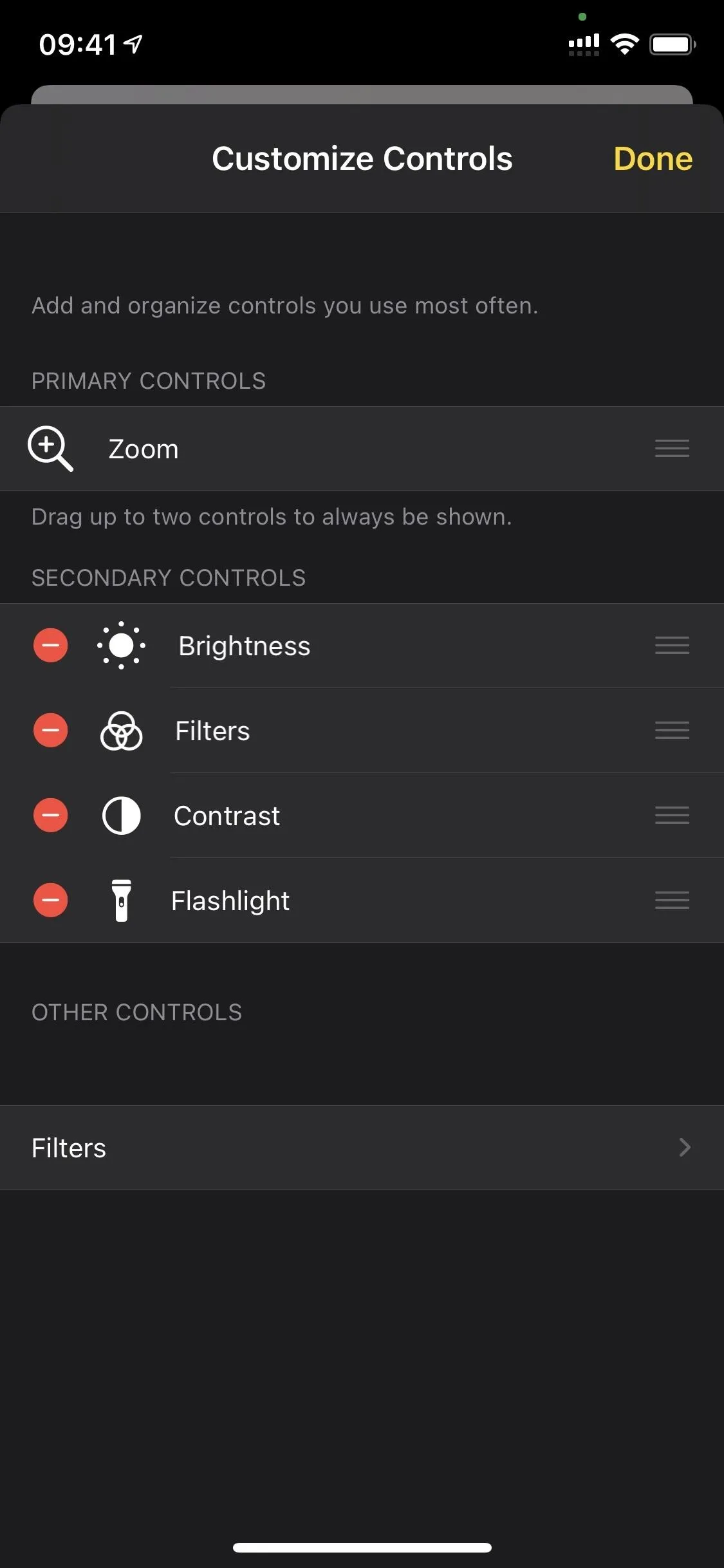

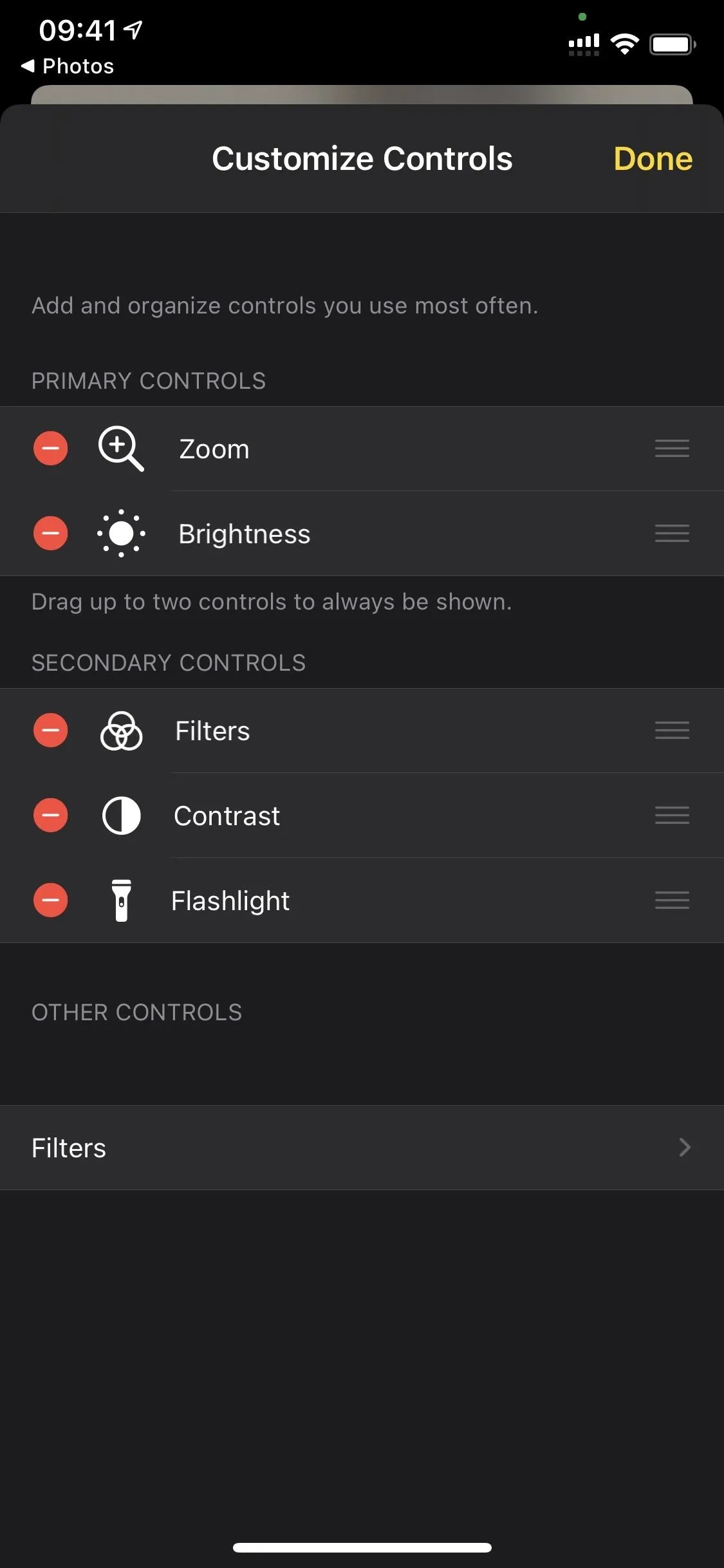

9. Which Can Be Customized

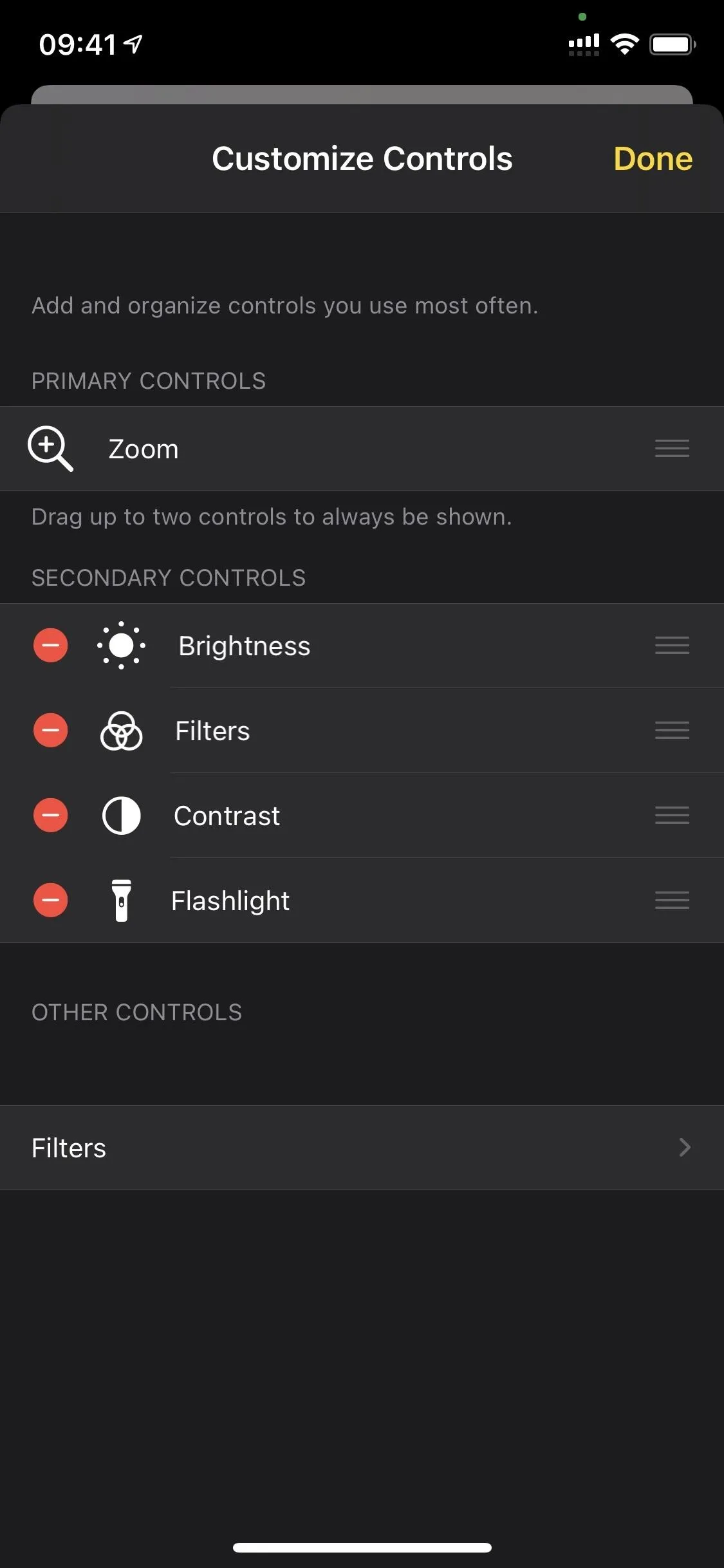

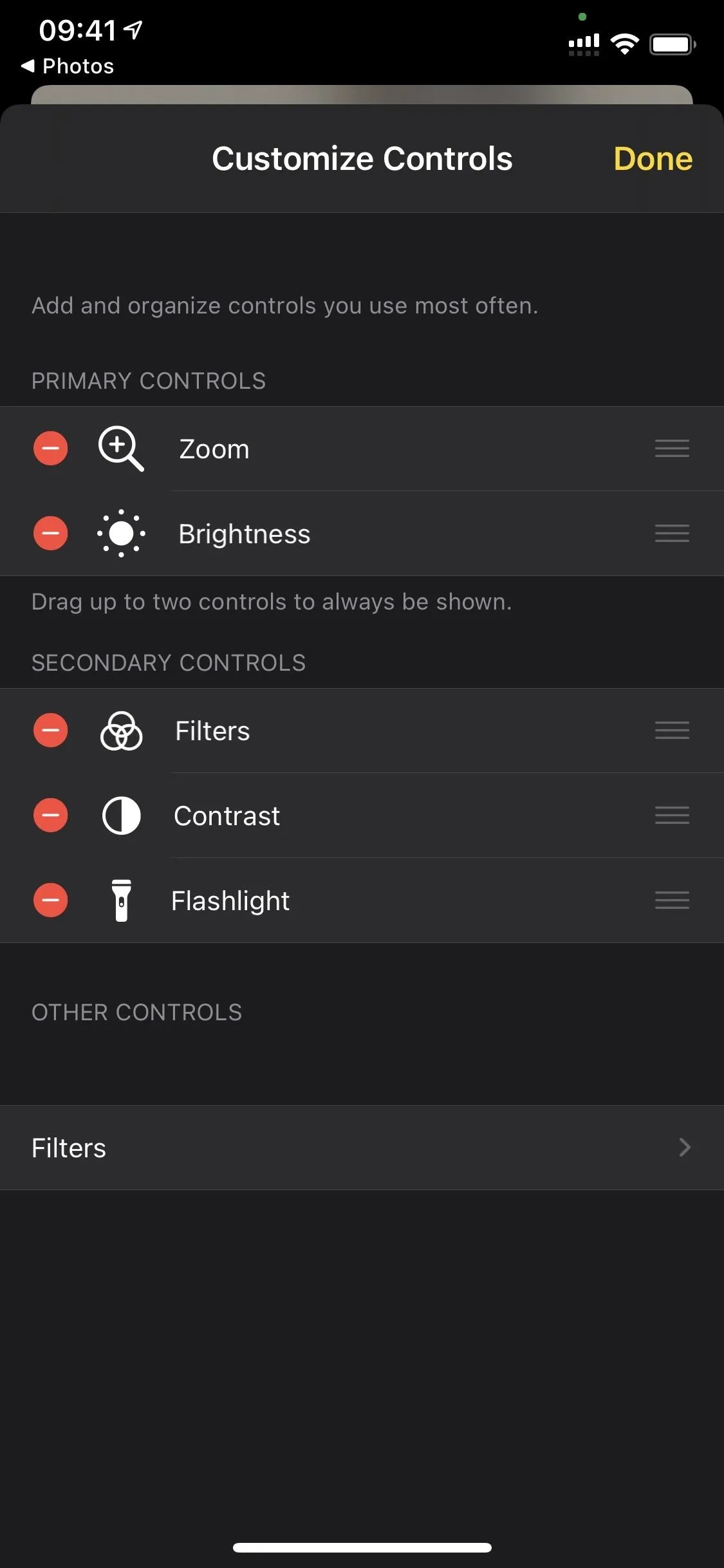

Also new to the controls is the cog button, which takes you to Magnifier's control options. Here, you can choose the primary controls that always stay visible at the top, even when the rest of the controls are minimized. Zoom is the default, but you can use brightness, contrast, filters, or the flashlight instead. You need at least one primary control and can have up to two. You can also reorder the secondary controls or remove any of them.

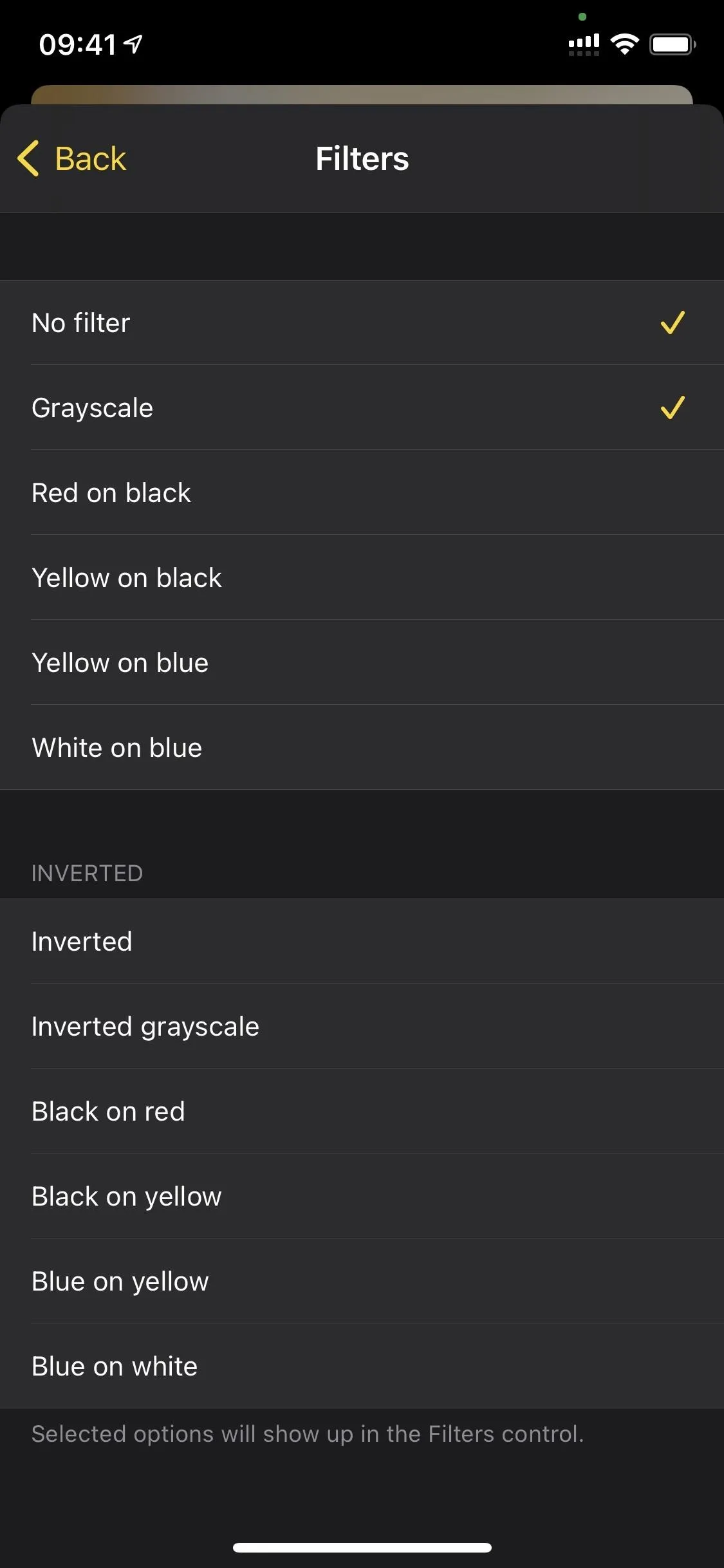

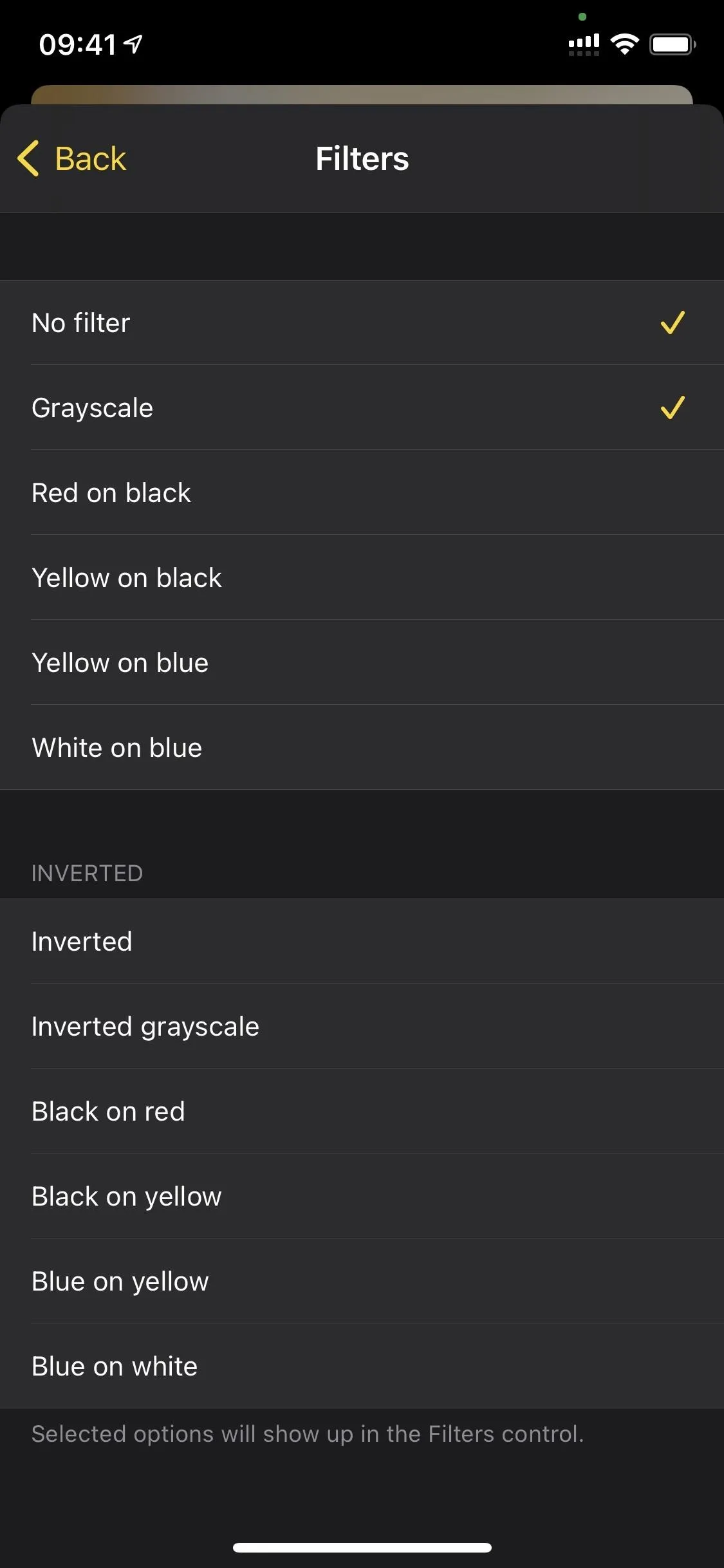

10. Magnifier's Filters Can Be Slimmed Down

Previously, after tapping the filter button, you had to swipe through every filter until you found the one you needed. That's still the case in iOS 14, but if you tap the cog icon, then "Filter Customization," you can uncheck any filters you don't use. That way, there's less to swipe through.

11. And You Can Shoot Multiple Images

In previous versions, when you tapped the shutter button, it would create a still image of the view that you could further interact with. Now, you can tap the multi-image button in the controls to temporarily save a picture so you can interact with it later. Then, you can continue using the shutter to take and save more stills. (These stills are not saved to Photos.)

To view older stills, tap "View," then select the image you want. Magnifier will remember these images until you press "End" when looking at all of the stills.

12. Back Tap Gives Two More Shortcuts for Actions You Use the Most

In previous versions of iOS, you could triple-click the Side or Home button to quickly start any accessibility feature you had enabled for that shortcut. That option is still available in iOS 14, but now there's a new gesture that can do even more.

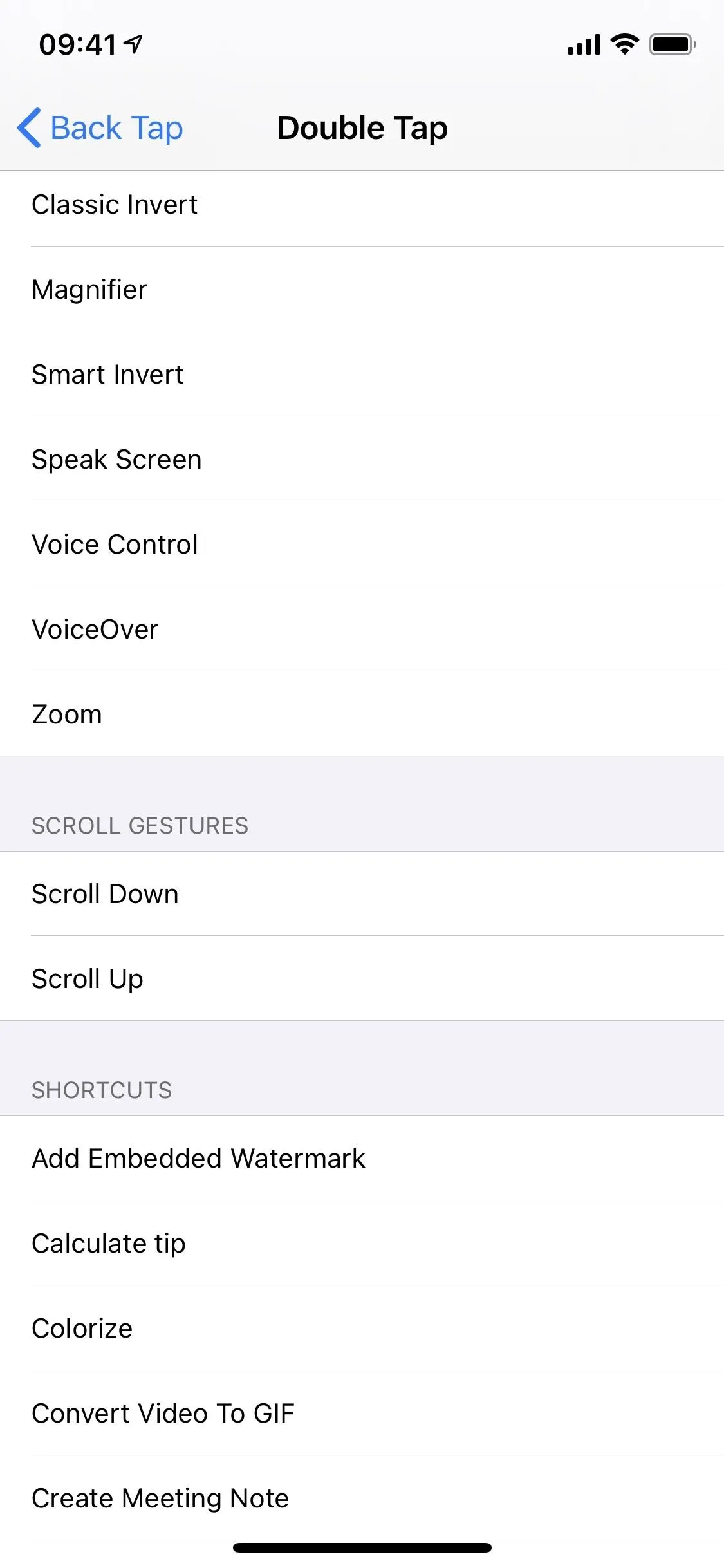

In the "Touch" accessibility menu, there's a new option called "Back Tap." Choose it, and you'll see options for "Double Tap" and "Triple Tap." These refer to tapping the back of your iPhone, not any buttons or on-display controls. In each of those menus, you can select the feature you want to trigger with that gesture, and the list of what's possible is HUGE. Then, double- or triple-tap the center of your iPhone's back to launch the action.

Unlike Accessibility Shortcuts, these gestures can launch more than just accessibility options. You can control volume, lock the screen, reveal the Notification Center, scroll up or down, and much more. There's even support for shortcuts created with the Shortcuts app. Our favorite, though, is "Shake," so you no longer have to physically shake the iPhone to undo typing or restore something you deleted, a gesture that doesn't always register.

13. Voice Control Has More Languages

Introduced in iOS 13, the new and improved Voice Control lets you operate your iPhone using only your voice. In iOS 14, it gains support for two more languages: British English and Indian English.

14. And It Works with VoiceOver

What's even better than new languages for Voice Control? The fact that it now works with VoiceOver. So if you've ever wanted to use both features at the same time, now you can.

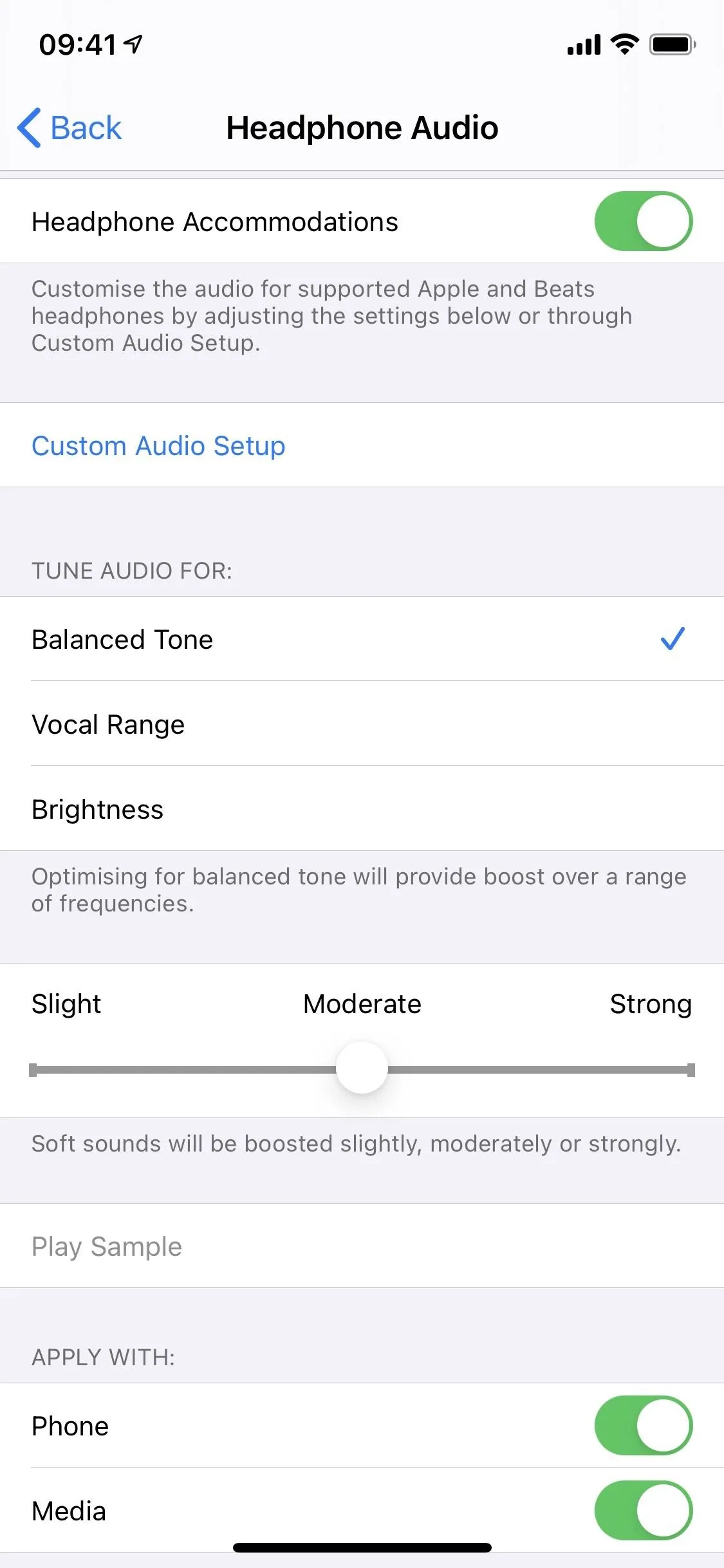

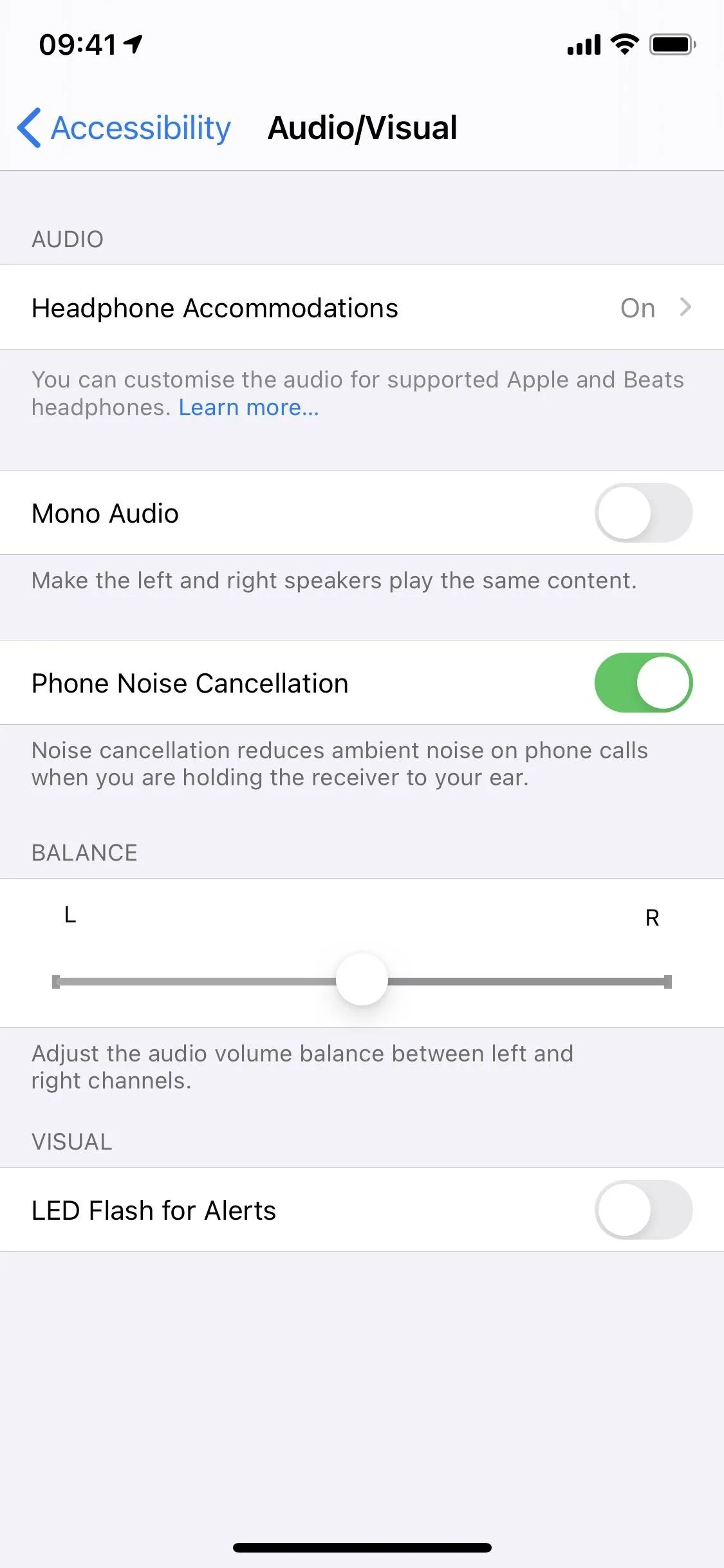

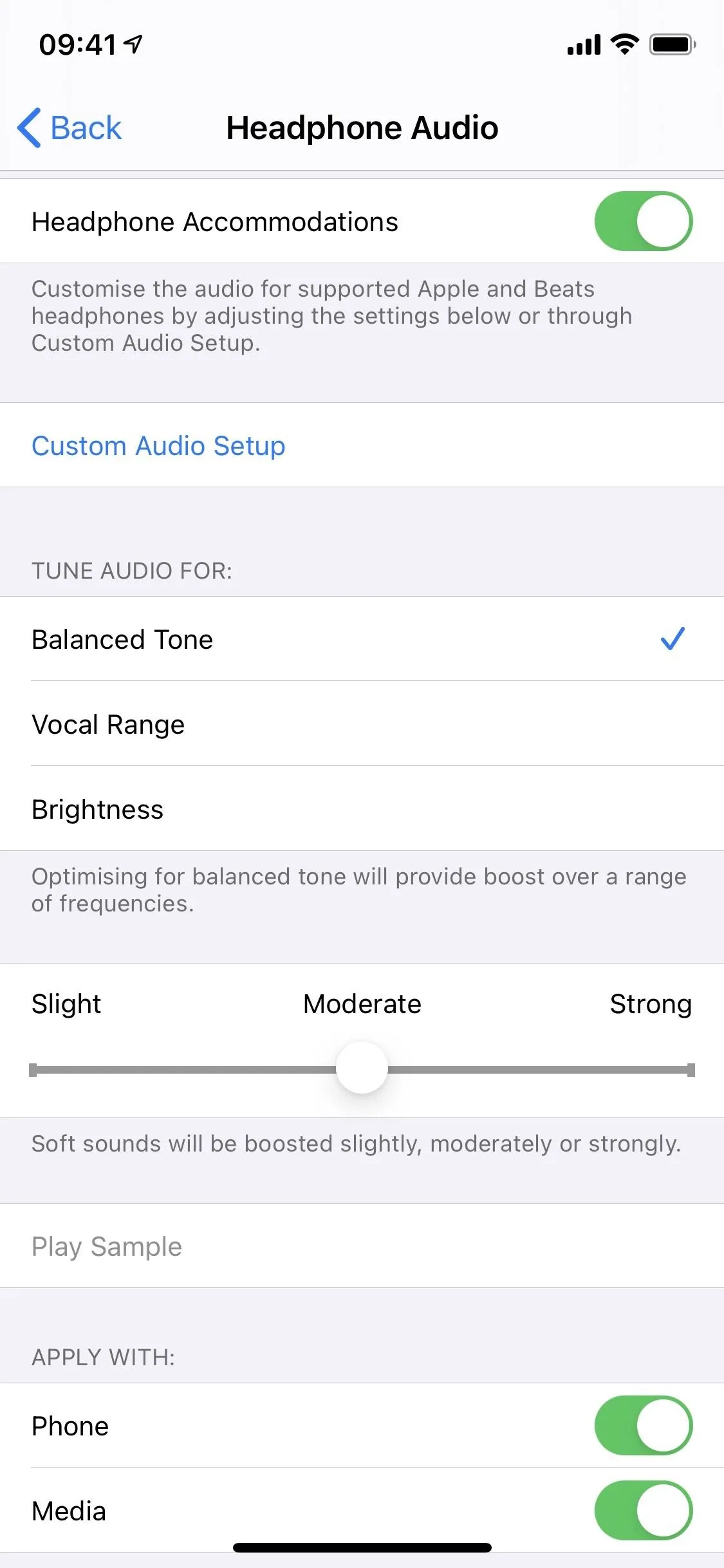

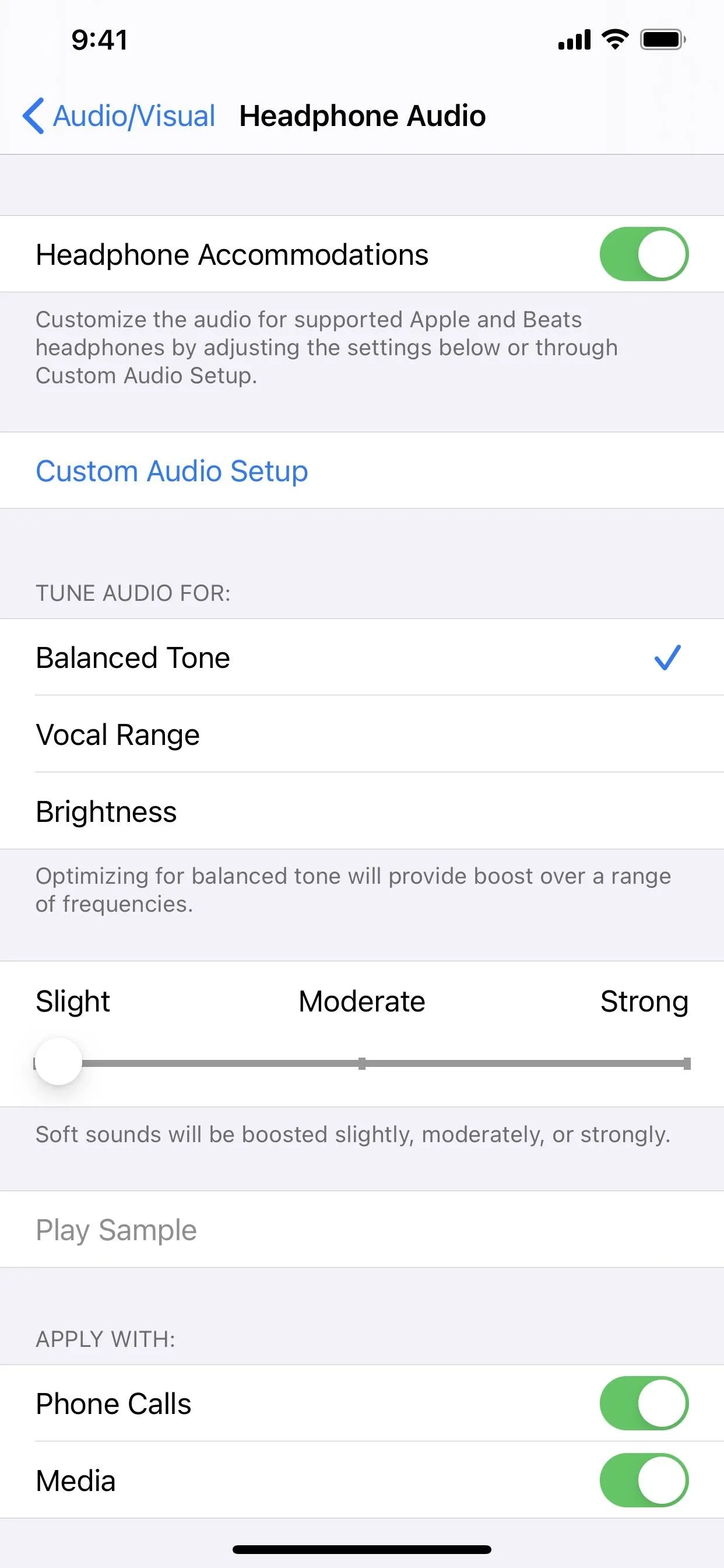

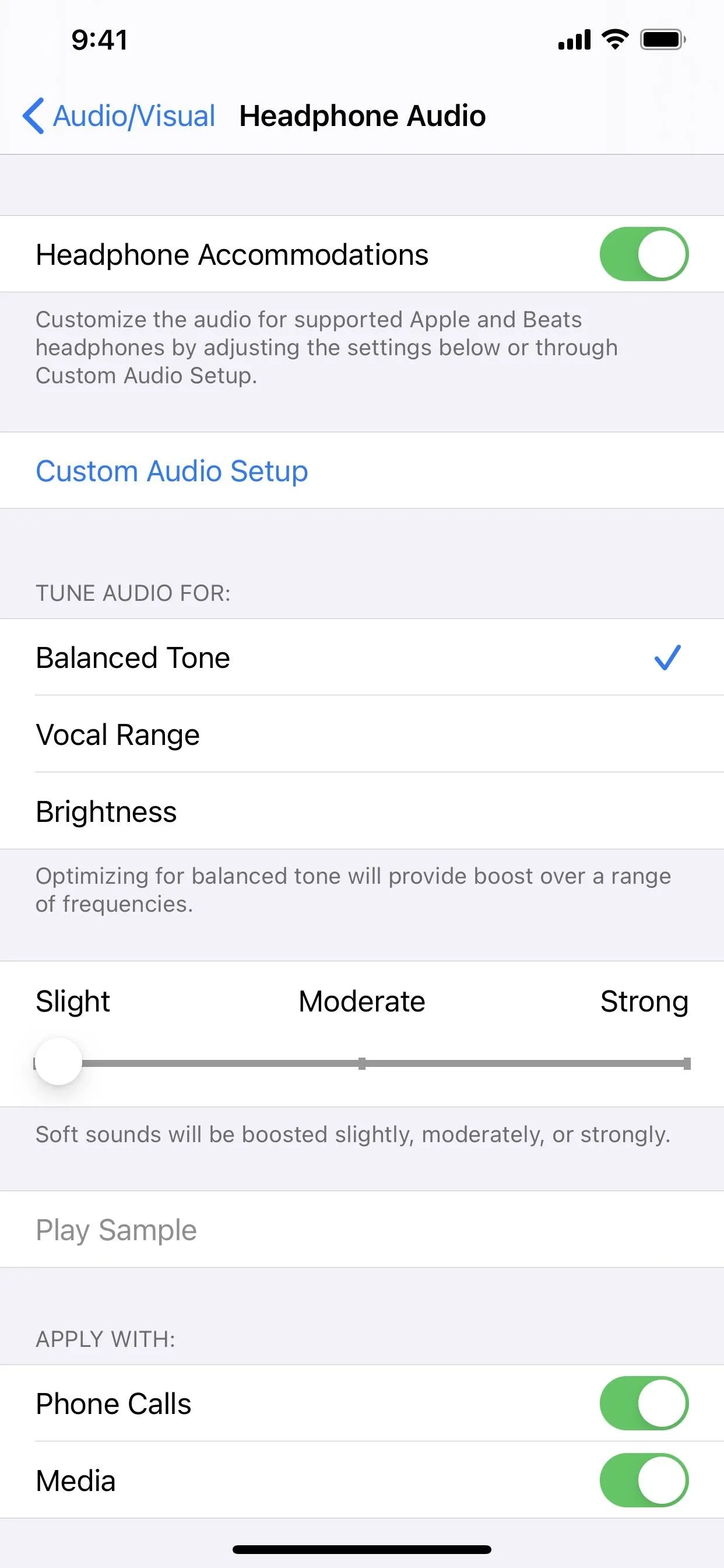

15. Headphone Accommodations Help You Hear Better

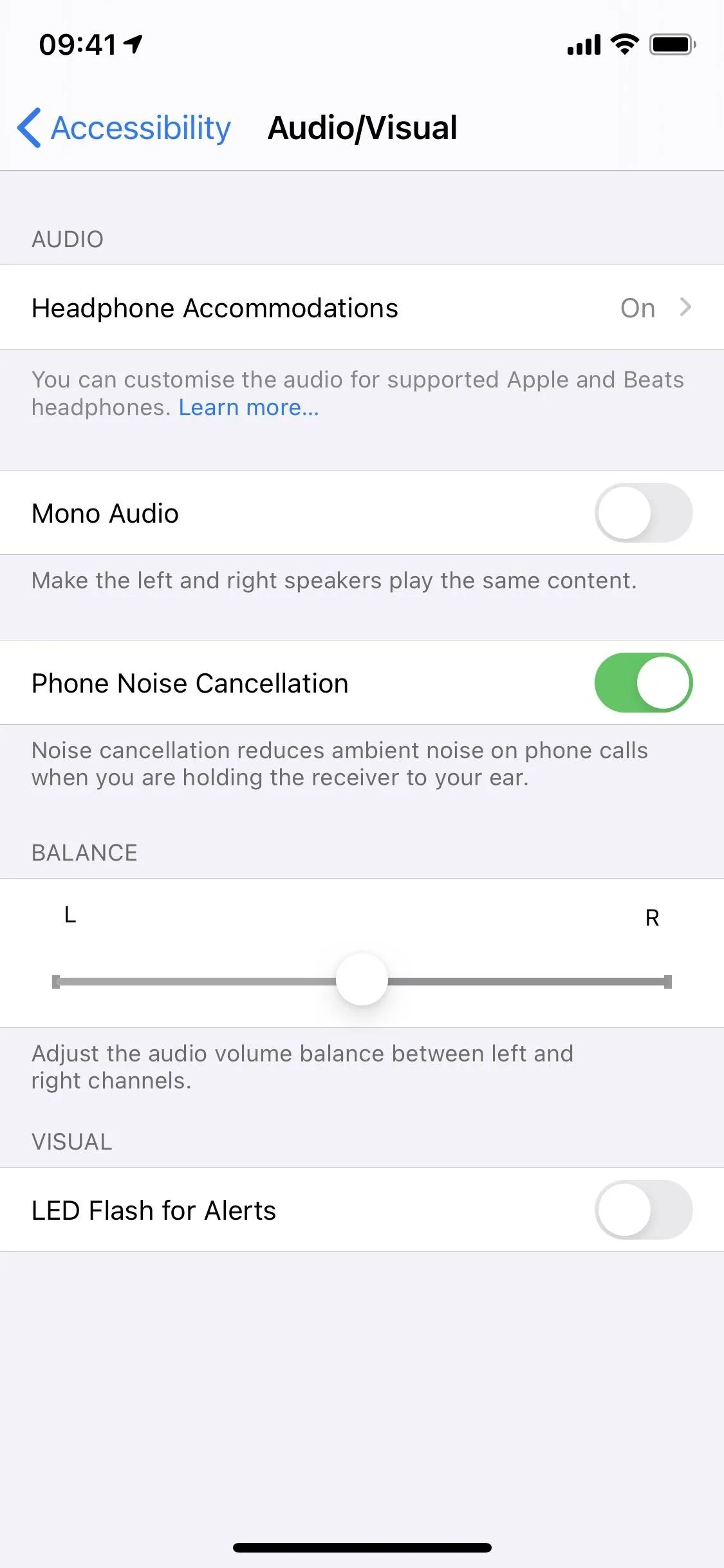

In the "Audio/Visual" accessibility setting, there's a new option called "Headphone Accommodations." When you toggle this on, you get a chance to fine-tune how you hear things in your headphones. And if you have a set of AirPods Pro, it works in conjunction with Transparency mode.

You can change the audio from a balanced tone to one optimized for higher-frequency sounds or for vocals in the middle frequencies. There's also a slider that sets how much soft sounds are boosted: slight, moderate, or strong. You can hear these changes in real time as you make them using the "Play Sample" button. Best of all, you can choose to apply these settings to media (music, movies, podcasts, etc.), phone calls, or both.

16. And Can Be Further Customized on AirPods & Beats

If you own a pair of AirPods, AirPods Pro, or select Beats headphones, there's another Headphone Accommodations option that lets you create a "Custom Audio Setup." When your headphones are connected, it walks you through different sound samples to build a setup that best suits your hearing. AirPods Pro also support Transparency mode, which makes quieter voices around you easier to hear.

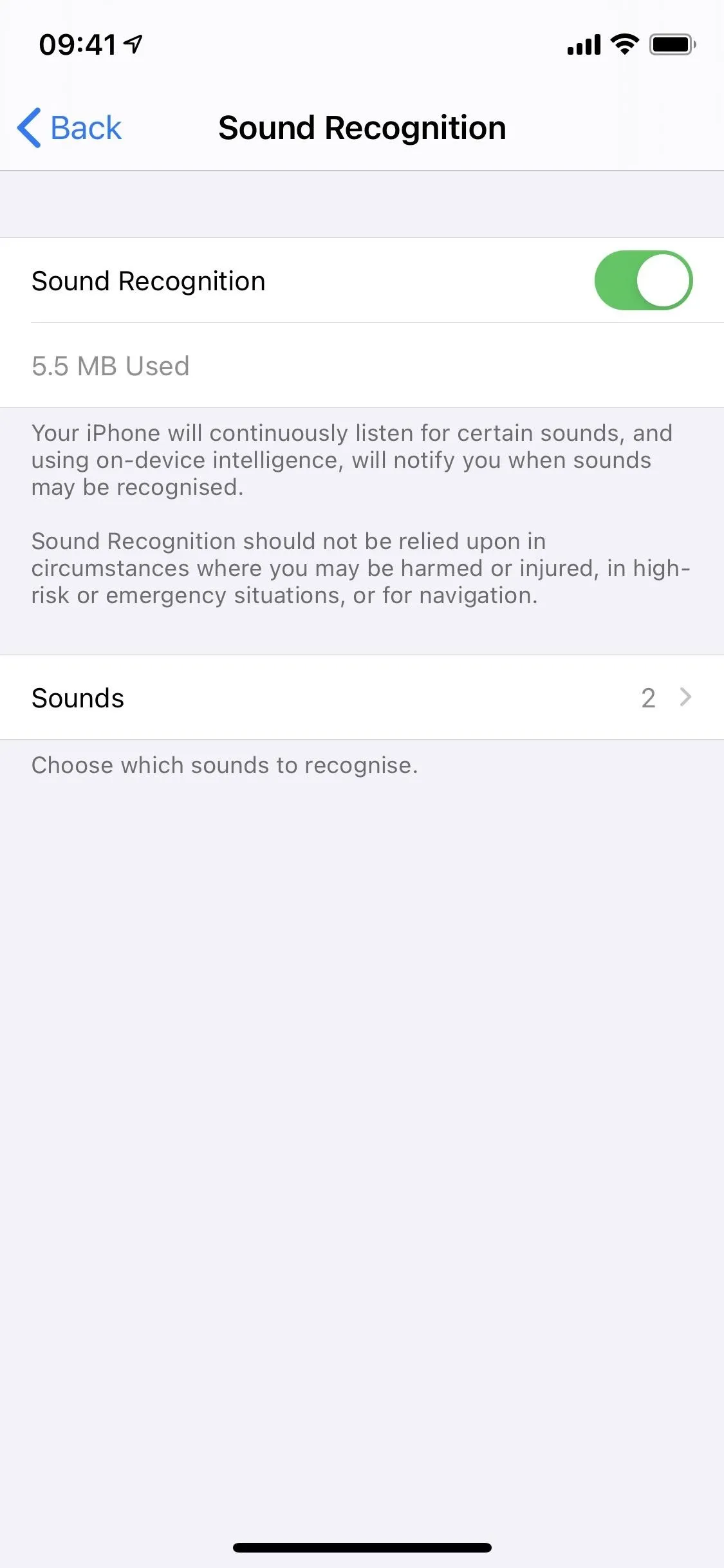

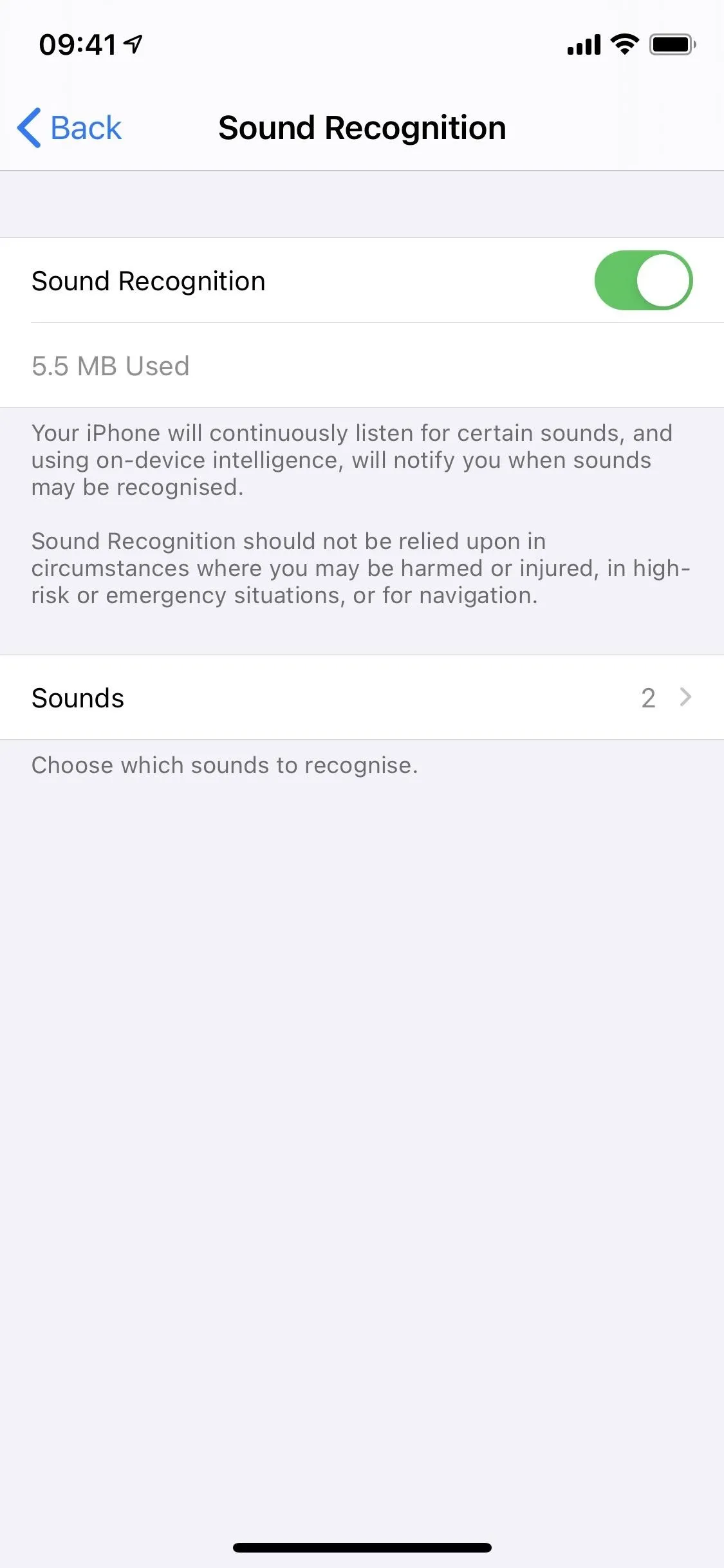

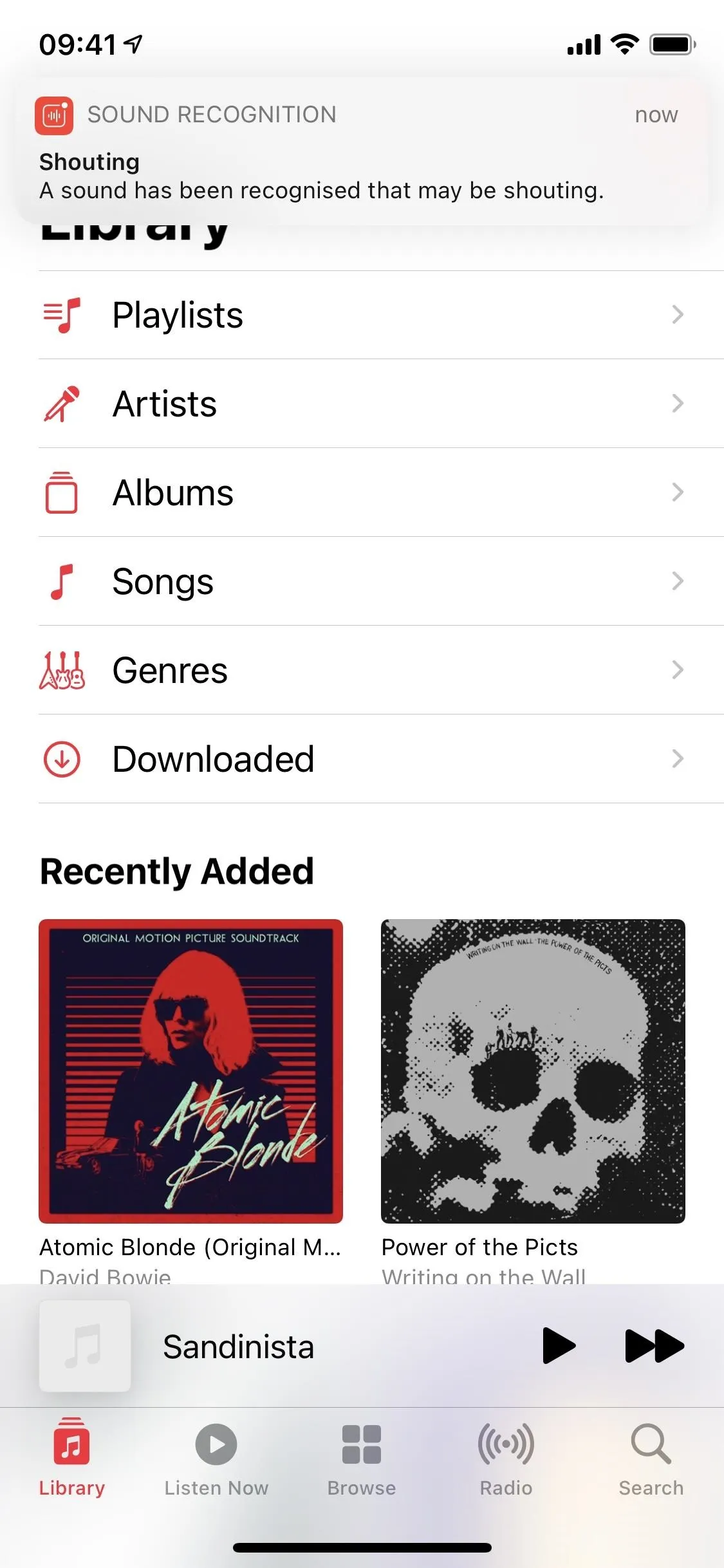

17. Sound Recognition Helps You Hear Important Things

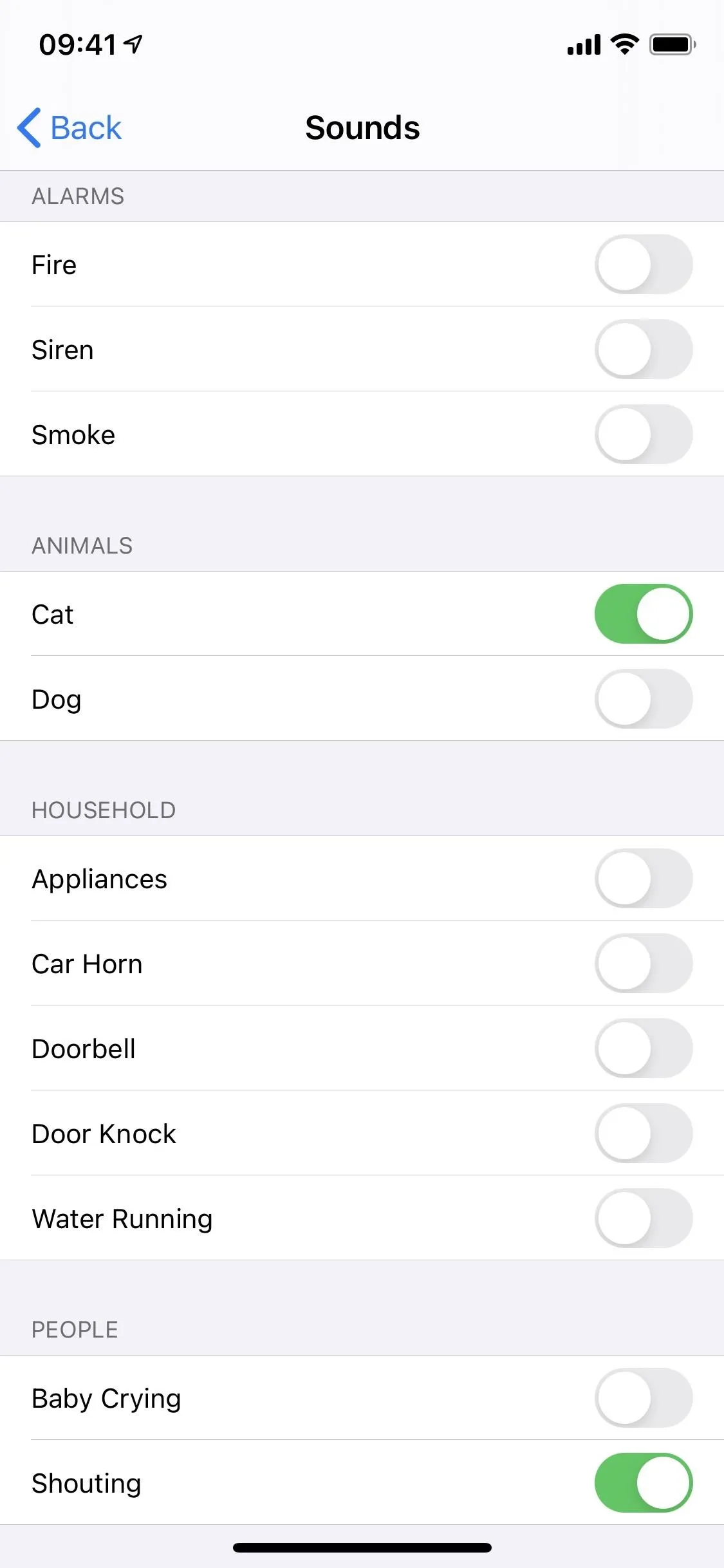

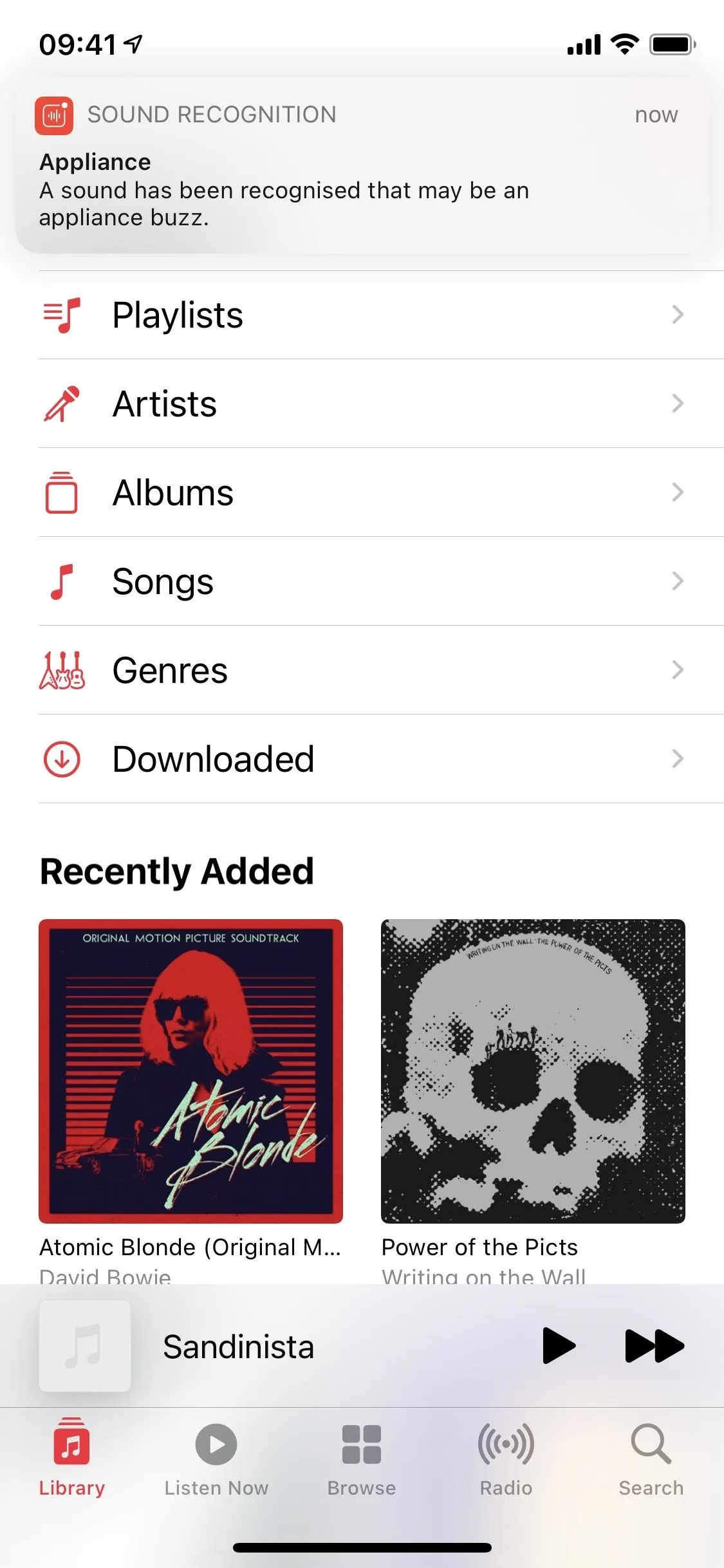

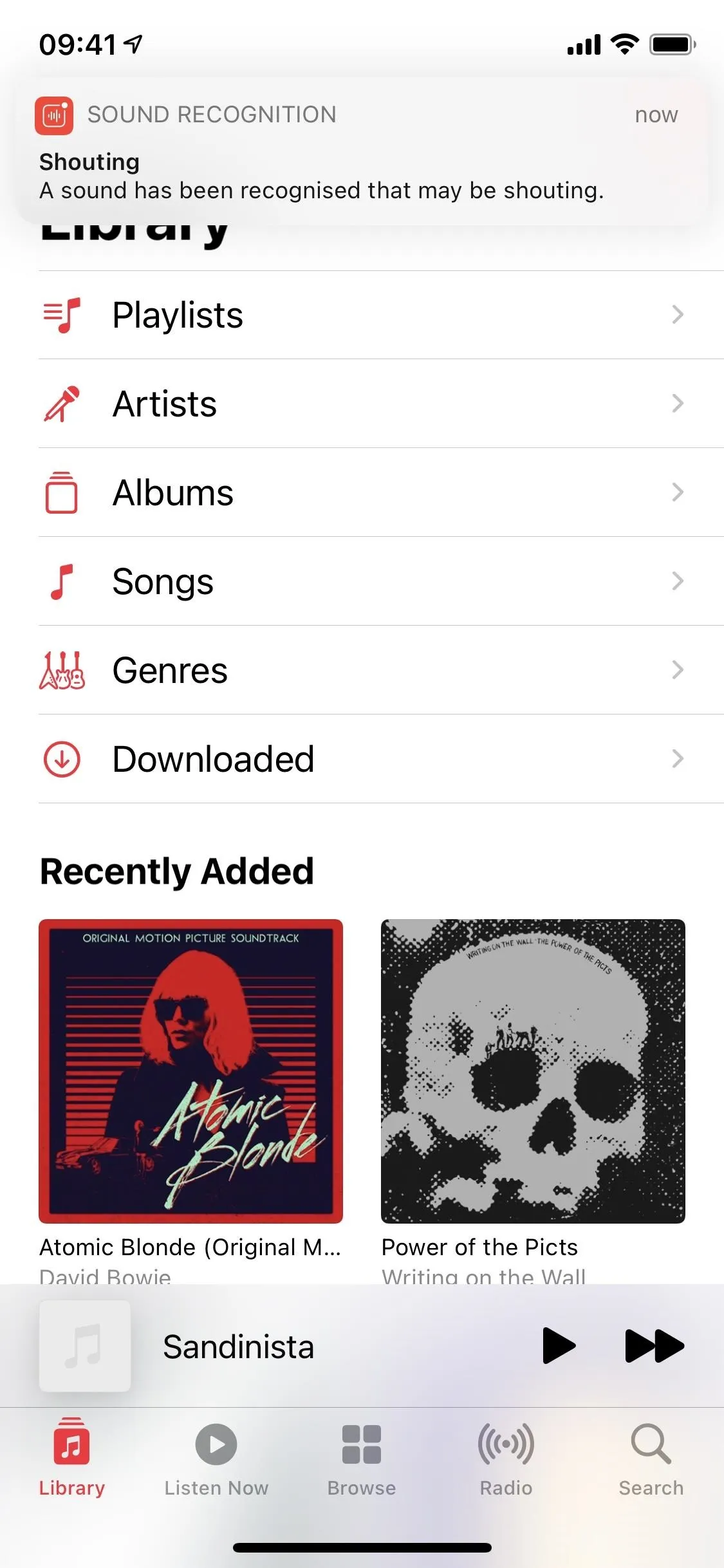

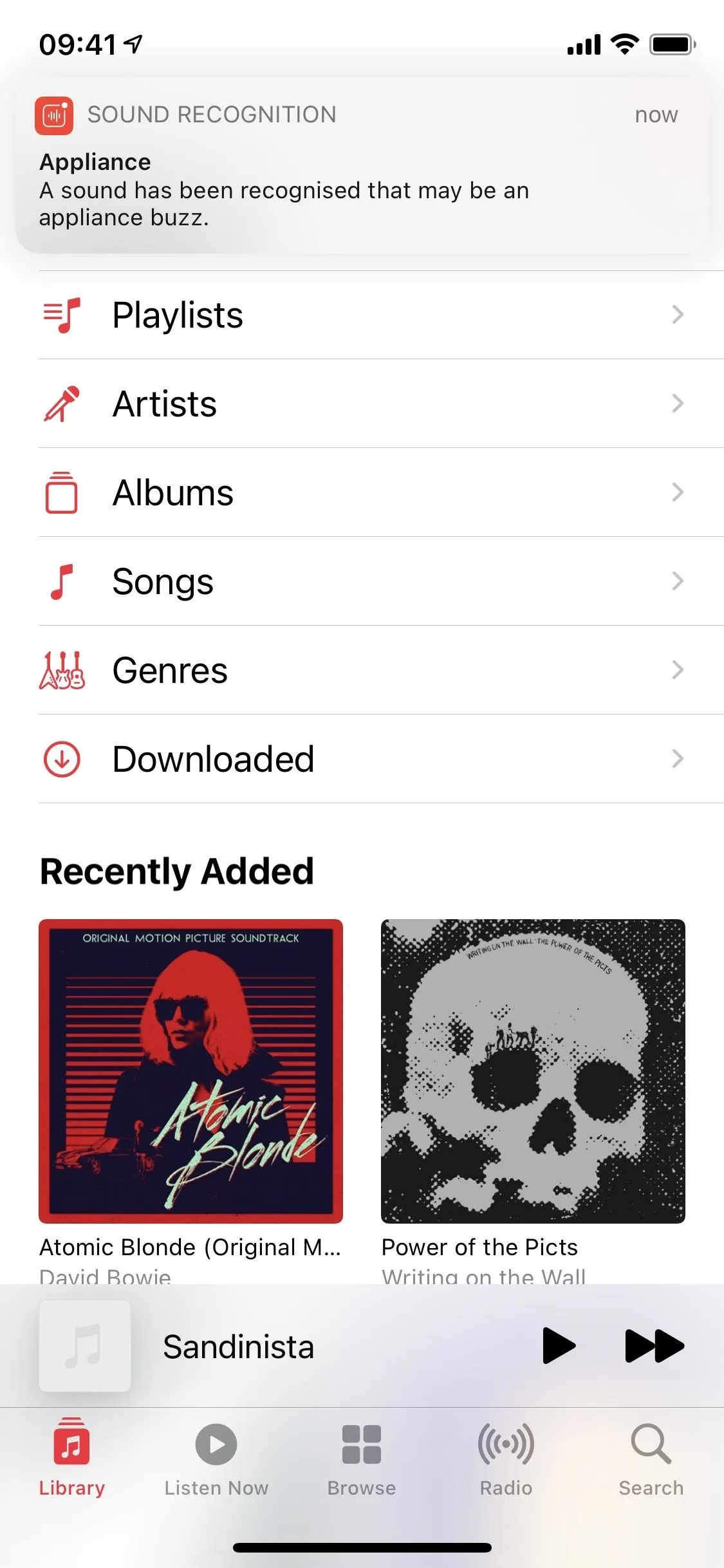

Using the Neural Engine on your iPhone, iOS 14 can detect background audio that may be trying to alert you. When enabled from the Accessibility menu, "Sound Recognition" will continually listen to ambient sounds around you, hunting for specific sounds that you choose — all without draining your battery.

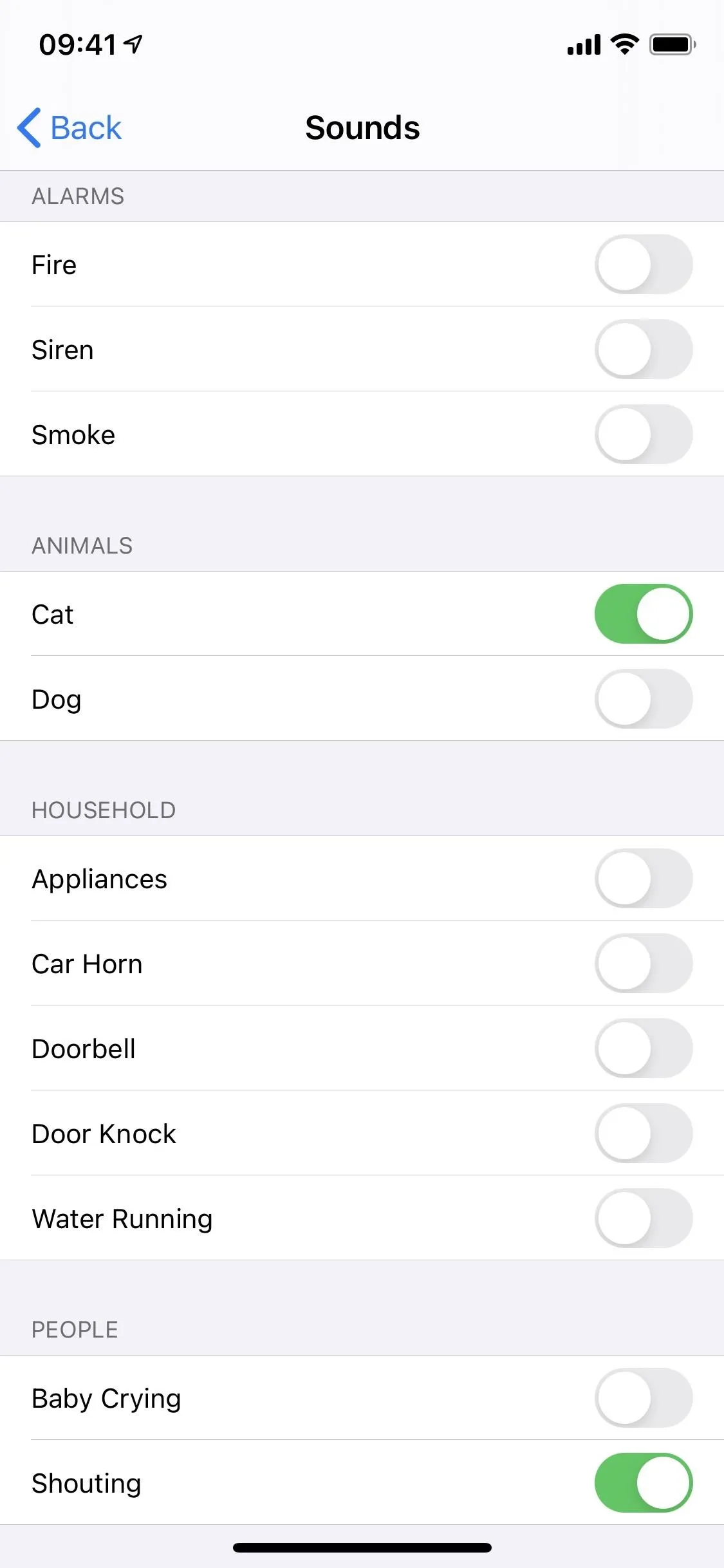

When you turn the feature on, tap "Sounds" and toggle on anything under Alarms (fire, siren, smoke), Animals (cat, dog), Household (appliances, car horn, doorbell, door knock, water running), or People (baby crying, shouting).

These sounds are detected using on-device intelligence, so nothing is being recorded or sent anywhere. When you get an alert, you'll see a notification and may feel a vibration depending on your "Sounds & Haptics" settings.

18. Real-Time Text Lets You Multitask

Since 2017, people with hearing or speech disabilities have been able to use Software RTT (real-time text) to communicate with others in a phone call. Unlike with messages, there's no need to hit send, since text appears on the recipient's screen as you type it, emulating an audio call. In iOS 14, you can now multitask with this feature: when you're outside the Phone app, and therefore away from the conversation, you'll still receive RTT notifications.

19. FaceTime Detects Sign Language

Usually, in Group FaceTime, the active speaker's tile is enlarged unless you disable the feature. But not everyone communicates by speaking aloud, as is the case for people who sign. Starting in iOS 14, whenever FaceTime detects someone using sign language, they'll be emphasized in the video call as the prominent speaker.

Cover photo and GIFs by Jon Knight/Gadget Hacks; Screenshots by Justin Meyers/Gadget Hacks
